AI is no longer a tool – it has become a full-scale attack surface.

With the rise of Copilot agents, autonomous LLM workflows and enterprise AI pipelines in 2026, the familiar security landscape has been rewritten. Identity, data protection, DLP, Zero Trust and DevSecOps now require an entirely new architectural lens.

This eight-part series explores how modern AI systems actually behave, the risks they introduce, and the engineering patterns that let organisations stay ahead when a model holds user tokens, tools and context, and operates at a speed no human can match.

Across the series, we will examine:

• emerging threats and the new MITRE ATT&CK-AI framework

• data risks highlighted in the Digital Defense Report 2026

• Purview’s role as the enterprise “AI control plane”

• secure agent and sandbox architectures

• token cryptography and identity attestation

• protection of toolchains, pipelines and vector stores

• safe-output engineering and exfiltration controls

• the complete blueprint for a secure enterprise-grade AI platform.

If your organisation is building or deploying AI, this series will show you how to stop your model from accidentally – or deliberately – burning the company down.


**CHAPTER 1 / 8: Microsoft’s 2026 Digital Defense Report DECODED**

Introduction: “Cybersecurity is dead. Long live cybersecurity.”

The Microsoft Digital Defense Report 2026 is not “just another PDF nobody reads.”

It’s an X-ray — and the diagnosis isn’t flattering. Enterprise networks increasingly resemble organisms with autoimmune disorders: internal processes devour internal data, and everyone pretends it’s normal.

And the best part? In 2026, Microsoft effectively admits (between the lines) that:

• attacks move faster than patches,

• models move faster than policies,

• AI agents are smarter than most SOC analysts,

• and human-driven data leaks are as eternal as GPL licences.

Enough poetry. On to the heavy engineering.

1. The 2026 Threat Landscape: the numbers nobody wanted to see

1.1. Collapse of legacy defensive models

According to MDDR-2026 (the Microsoft Digital Defense Report 2026):

• 72% of successful attacks target the identity layer

• 54% exploit tokens (OAuth replay, refresh-token theft, silent-auth abuse)

• 31% bypass MFA (device-code phishing, AiTM, OAuth consent fraud)

• 19% are supply-chain compromises

• 90% of all threats involve some form of AI-augmented phishing chain

(Yes, the numbers exceed 100%. Welcome to modern statistics: one attack counts as three categories.)
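The overlap is easy to reproduce. In the toy sketch below, the five incidents and their labels are invented for illustration (they are not MDDR data): each incident can carry several category labels, every category share is computed against the same denominator, and so the shares add up to well over 100%.

```python
from collections import Counter

# Invented incidents for illustration; each can carry several category labels.
incidents = [
    {"identity", "token", "ai_phishing"},
    {"identity", "mfa_bypass", "ai_phishing"},
    {"identity", "token"},
    {"supply_chain", "ai_phishing"},
    {"identity", "token", "mfa_bypass", "ai_phishing"},
]


def category_shares(tagged: list[set[str]]) -> dict[str, float]:
    """Percentage of incidents carrying each label; labels overlap, so shares can sum past 100."""
    counts = Counter(label for tags in tagged for label in tags)
    return {label: 100 * n / len(tagged) for label, n in counts.items()}


shares = category_shares(incidents)
for label, pct in sorted(shares.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{label:>12}: {pct:.0f}%")
print(f"{'total':>12}: {sum(shares.values()):.0f}%")  # 280% from only 5 incidents
```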

1.2. Why identity has become the primary battlefield

The core 2026 issue: a token ≠ a human.

Most enterprise systems still behave as if “a token issued eight hours ago” equals a verified login.

AI-enhanced attackers do as they please:

• intercept a token from a compromised application,

• reuse it in another context,

• bypass MFA by hijacking an already-authenticated session,

• pivot into internal services,

• perform lateral movement through legacy APIs.

All of this happens silently.

To the SOC, it looks like a “successful login.”
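One way to stop treating an old token as a fresh login is to re-evaluate it every time it is used. The sketch below is a hypothetical check, not an Entra ID API: it assumes the resource server can read the token’s `iat`, `exp` and OIDC `auth_time` claims, plus two made-up binding claims (`bound_device`, `issued_ip`) recorded at issuance, and it flags tokens whose interactive authentication is stale or which are being replayed from a different context.

```python
from dataclasses import dataclass

MAX_AUTH_AGE_S = 60 * 60  # assumption: demand re-authentication after one hour


@dataclass
class Presentation:
    """Where and when a token is being used (hypothetical request context)."""
    device_id: str
    source_ip: str
    now: int  # Unix seconds


def replay_findings(claims: dict, ctx: Presentation) -> list[str]:
    """Return reasons to distrust the token; an empty list means no finding."""
    findings = []
    # OIDC `auth_time`: when the user last authenticated interactively.
    auth_time = claims.get("auth_time", claims.get("iat", 0))
    if ctx.now - auth_time > MAX_AUTH_AGE_S:
        findings.append("stale interactive authentication")
    if claims.get("exp", 0) <= ctx.now:
        findings.append("token expired")
    # Hypothetical binding claims captured when the token was issued.
    if claims.get("bound_device") != ctx.device_id:
        findings.append("device mismatch")
    if claims.get("issued_ip") != ctx.source_ip:
        findings.append("network context changed")
    return findings


claims = {"iat": 1_000, "auth_time": 1_000, "exp": 40_000,
          "bound_device": "laptop-42", "issued_ip": "10.0.0.5"}
print(replay_findings(claims, Presentation("laptop-42", "10.0.0.5", now=2_000)))  # []
print(replay_findings(claims, Presentation("vm-7", "203.0.113.9", now=30_000)))
```

The second call returns three findings even though the token itself has not expired, which is exactly the case a plain "is the token valid?" check misses. A changed IP is only a weak signal on its own; in practice it would feed a risk score rather than block outright.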

1.3. MITRE ATT&CK: the new 2026 techniques

Added or strengthened techniques:

• TA0001 Initial Access

– T1621: AI-assisted phishing kits

– T1650: Device Code Flow Interception

• TA0006 Credential Access

– T1606.003: OAuth token replay

– T1110.005: AI-generated credential stuffing

• TA0009 Collection

– T1560.003: Exfiltration via AI-assistant API output

• TA0011 Command and Control

– T1105.002: LLM-proxy tunnelling

MITRE has stopped pretending: AI is now used by both attackers and defenders.

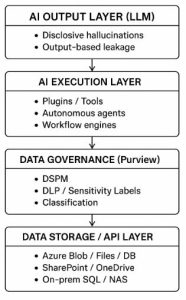

2. Emerging Data Risks: where the data actually leaks

2026 is the year enterprises accepted a difficult truth: data leaks don’t happen because the SOC is bad; they happen because AI models are too good.

2.1. Data Leakage via AI Inputs (Prompt-Based Exfiltration)

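Suppose an attacker gains access to an internal AI agent.

Even if the agent reaches corporate data only through protected APIs, it can still leak that data through its answers, for example by summarising, quoting or re-encoding it on request.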

DLP reacts to files.

An AI model is not a file — it is a leakage channel.
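If the model is the channel, the inspection has to sit on the channel. A minimal sketch, assuming the agent’s reply is plain text and that pattern matching is acceptable as a first filter: it scans each response for payment-card numbers (validated with the Luhn checksum to cut false positives) and email addresses, and redacts them before the text leaves the agent. The patterns and the `redact_response` helper are illustrative, not Purview DLP’s actual classifiers.

```python
import re

# 13-19 digits, optionally separated by single spaces or dashes.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, test mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_response(text: str) -> tuple[str, list[str]]:
    """Redact sensitive spans in a model reply; return (safe_text, finding types)."""
    findings: list[str] = []

    def card_sub(m: re.Match) -> str:
        digits = re.sub(r"\D", "", m.group())
        if luhn_ok(digits):
            findings.append("payment_card")
            return "[REDACTED CARD]"
        return m.group()  # long digit run that is not a valid card number

    def email_sub(m: re.Match) -> str:
        findings.append("email")
        return "[REDACTED EMAIL]"

    text = CARD_RE.sub(card_sub, text)
    text = EMAIL_RE.sub(email_sub, text)
    return text, findings


print(redact_response("Card 4111 1111 1111 1111, mail jane.doe@contoso.com"))
```

Regexes will not catch paraphrased or re-encoded data, so a filter like this is a floor, not a ceiling; the design point is only that the check runs on every response rather than on files at rest.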

2.2. Data Leakage via AI Outputs (Hallucination Leakage)

If your model was trained on corporate data — congratulations.

Anyone can now extract fragments through “creative” prompts, because the model:

• doesn’t know what is confidential,

• stores no access policy,

• doesn’t understand regulation,

• and desperately wants to be helpful.

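For example, a model may answer with a passage that reads like an internal memo.

No one uploaded that text.

The model “assembled” it from internal patterns it absorbed during training.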

This is called disclosive hallucination — a new MDDR-2026 term.
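One practical way to tell whether a “hallucinated” answer actually reproduces protected text is to measure verbatim overlap. The sketch below is an illustrative technique, not an MDDR or Purview algorithm: it indexes word n-grams from documents you classify as confidential and reports what fraction of the model output’s n-grams appear in that index. The window size `n=6` and the sample strings are assumptions.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased word n-grams, ignoring punctuation."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def build_index(confidential_docs: list[str], n: int = 6) -> set[tuple[str, ...]]:
    """Union of n-grams from every confidential document."""
    index: set[tuple[str, ...]] = set()
    for doc in confidential_docs:
        index |= ngrams(doc, n)
    return index


def overlap_ratio(output: str, index: set[tuple[str, ...]], n: int = 6) -> float:
    """Share of the output's n-grams that also occur in confidential text."""
    grams = ngrams(output, n)
    if not grams:
        return 0.0
    return len(grams & index) / len(grams)


index = build_index(["The Q3 acquisition of Fabrikam will be announced on 14 November"])
answer = "Rumour says the acquisition of Fabrikam will be announced on 14 November"
print(round(overlap_ratio(answer, index), 2))  # 0.57
```

Exact n-gram matching only catches near-verbatim reproduction; a model that paraphrases confidential facts will score low, so a threshold on this ratio is one signal among several.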

2.3. Data Leakage via Plugins / Tools / AI Extensions

Copilot plugins (AI “tools”) are a gift to attackers.

If a tool has:

• database access,

• file-creation permissions,

• the ability to execute queries,

• the ability to send HTTP requests,

then an AI agent becomes a fully autonomous data-exfiltration bot.

Undetected.
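The danger lies in the combination: one capability that reads internal data plus one that can move data elsewhere is already an exfiltration path. A minimal pre-deployment check, assuming each tool declares its capabilities in a manifest; the `Cap` names and the source/sink split are hypothetical, not part of any Copilot plugin manifest format.

```python
from enum import Enum, auto


class Cap(Enum):
    READ_DATABASE = auto()
    CREATE_FILES = auto()
    EXECUTE_QUERIES = auto()
    SEND_HTTP = auto()


# Capabilities that expose internal data vs. those that can carry it out.
SOURCES = {Cap.READ_DATABASE, Cap.EXECUTE_QUERIES}
SINKS = {Cap.SEND_HTTP, Cap.CREATE_FILES}


def exfiltration_paths(agent_tools: dict[str, set[Cap]]) -> list[tuple[str, str]]:
    """Pairs (source_tool, sink_tool) that together give an agent a leak path.

    A single tool with both kinds of capability pairs with itself.
    """
    sources = [name for name, caps in agent_tools.items() if caps & SOURCES]
    sinks = [name for name, caps in agent_tools.items() if caps & SINKS]
    return [(src, dst) for src in sources for dst in sinks]


tools = {
    "crm_lookup": {Cap.READ_DATABASE},
    "report_writer": {Cap.CREATE_FILES},
    "webhook": {Cap.SEND_HTTP},
    "calendar": set(),
}
print(exfiltration_paths(tools))
```

Any non-empty result means the agent should not hold that toolset without an egress control (DLP on the sink, an allowlisted destination, or human approval) between the two tools.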

3. Industrial Attack Patterns in the AI Era: R&D-Level Breakdown

3.1. Token Replay Attack (OAuth 2.0 / Entra ID / Copilot)

How the attack works:

• The attacker obtains a refresh token.

• They use it for later silent authentication flows.

• Silent authentication issues new access tokens.

• Those tokens allow copying data from Purview-scanned sources.

• Every action appears fully legitimate:

“User: same; Device: yes; MFA: yes (in the past)”

Why this is deadly in an AI context

AI agents often use Non-Interactive Token Refresh, meaning:

• no MFA

• no contextual evaluation

• no real-time Conditional Access (CA) policy evaluation

• no risk step-up

• no session attestation (before Entra 2026)

The AI agent becomes the attacker’s second identity.
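A widely recommended mitigation for refresh-token replay, described in the IETF OAuth 2.0 Security Best Current Practice (RFC 9700), is refresh-token rotation with reuse detection. Every refresh returns a new refresh token, and if an already-used token is presented again, the whole token family is revoked, because either the attacker or the legitimate client is now holding a stale copy. The in-memory `RefreshTokenStore` below is a hypothetical sketch of that idea, not how Entra ID implements it.

```python
import secrets


class TokenReuseDetected(Exception):
    """Raised when a rotated-out refresh token is presented again."""


class RefreshTokenStore:
    """In-memory sketch of refresh-token rotation with reuse detection."""

    def __init__(self) -> None:
        self._family_of: dict[str, str] = {}  # token -> family id
        self._active: dict[str, str] = {}     # family id -> currently valid token
        self._revoked: set[str] = set()       # revoked family ids

    def issue(self) -> str:
        """Start a new token family at interactive (MFA-backed) sign-in."""
        return self._mint(secrets.token_hex(8))

    def _mint(self, family: str) -> str:
        token = secrets.token_urlsafe(32)
        self._family_of[token] = family
        self._active[family] = token
        return token

    def refresh(self, presented: str) -> str:
        """Exchange a refresh token for a new one; reuse revokes the family."""
        family = self._family_of.get(presented)
        if family is None or family in self._revoked:
            raise PermissionError("unknown or revoked refresh token")
        if self._active[family] != presented:
            # An old token came back: someone else holds a copy.
            self._revoked.add(family)
            raise TokenReuseDetected(f"family {family} revoked")
        return self._mint(family)


store = RefreshTokenStore()
t1 = store.issue()
t2 = store.refresh(t1)  # legitimate client rotates normally
try:
    store.refresh(t1)   # attacker replays the stolen t1
except TokenReuseDetected as exc:
    print("replay detected:", exc)
```

After the replay, the legitimate client’s current token `t2` is dead too, which is the point: the only way back in is a fresh interactive sign-in, where MFA and Conditional Access run again.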

3.2. Payload Injection through AI Agents → Internal Network

Enterprise AI agents can:

• run SQL queries

• search SharePoint

• generate CSVs

• produce PowerShell

• call ERP/CRM APIs

• automate Jira/Confluence

That combination turns them into a Universal Internal Exploitation Layer (UIEL).

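An attacker plants a payload, for example instructions hidden in a document, ticket or page that the agent will later read.

If the AI agent isn’t isolated, it executes the malicious code.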
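Isolation can start at the tool boundary. For the “run SQL queries” capability, Python’s standard `sqlite3` module lets you install an authorizer callback that SQLite consults while compiling each statement. The sketch below allows only reads and built-in functions, so model-generated `DELETE`, `DROP` or `UPDATE` statements fail before they execute. SQLite stands in for whatever database the agent really uses, and `lock_down_for_agent` / `run_agent_sql` are hypothetical helper names.

```python
import sqlite3

# Authorizer action codes a read-only query may trigger; everything else is denied.
_ALLOWED = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def _read_only(action, arg1, arg2, db_name, trigger_or_view):
    """Consulted by SQLite for each operation while a statement is compiled."""
    return sqlite3.SQLITE_OK if action in _ALLOWED else sqlite3.SQLITE_DENY


def lock_down_for_agent(conn: sqlite3.Connection) -> None:
    """Make this connection read-only for the rest of its life (agent-only connection)."""
    conn.set_authorizer(_read_only)


def run_agent_sql(conn: sqlite3.Connection, sql: str):
    """Run model-generated SQL; return (rows, None) or (None, error message)."""
    try:
        return conn.execute(sql).fetchall(), None
    except (sqlite3.Error, sqlite3.Warning) as exc:
        return None, str(exc)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, salary INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 100), ("bob", 200)])
conn.commit()  # finish trusted setup before locking the connection down

lock_down_for_agent(conn)
print(run_agent_sql(conn, "SELECT name FROM users ORDER BY name"))
print(run_agent_sql(conn, "DELETE FROM users"))  # refused by the authorizer
```

The agent gets its own connection, and that connection is locked down for good, so trusted application code never shares a handle with model-generated SQL. The same principle applies to PowerShell or HTTP tools: enforce the allowed operations in the execution layer, not in the prompt.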

3.3. Cross-Domain Injection (CDI) — a new Prompt Injection class

Goal: force an enterprise AI agent to perform actions across domains:

ERP → HR → Finance → Security

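For example, an instruction planted in an ERP record can ask the agent to look something up in HR, act on it in Finance and then change a setting in Security, with every step running under the agent’s own permissions.

If RBAC isolation between domains is weak, the agent does all of it.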
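Stronger isolation means the agent’s permissions follow the request, not the agent. A hypothetical sketch, assuming every tool is tagged with a business domain: a session may only call tools in the domain where the user’s request originated, plus any cross-domain hops an administrator has explicitly approved, so an injected instruction cannot walk the agent from ERP into Security. The tool names and the `AgentSession` class are illustrative.

```python
from dataclasses import dataclass, field

# Hypothetical tool registry: tool name -> owning business domain.
TOOL_DOMAIN = {
    "erp.read_order": "ERP",
    "hr.lookup_employee": "HR",
    "finance.update_payee": "Finance",
    "security.disable_alert": "Security",
}


@dataclass
class AgentSession:
    origin_domain: str
    # Explicitly approved cross-domain hops, e.g. {("ERP", "Finance")}.
    approved_hops: set[tuple[str, str]] = field(default_factory=set)

    def authorize(self, tool: str) -> bool:
        """Allow same-domain calls and pre-approved hops; deny everything else."""
        target = TOOL_DOMAIN.get(tool)
        if target is None:
            return False  # unknown tools are denied by default
        if target == self.origin_domain:
            return True
        return (self.origin_domain, target) in self.approved_hops


session = AgentSession("ERP", {("ERP", "Finance")})
for tool in TOOL_DOMAIN:
    print(f"{tool:<24} {'allow' if session.authorize(tool) else 'deny'}")
```

The check runs outside the model, so no wording in a planted instruction can widen the session’s scope; only the approved-hops list, owned by an administrator, can.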

4. Data Governance Failure Modes (2026)

4.1. Purview DSPM reveals more risk than the SOC can handle

Microsoft reports:

• 68% of organisations don’t know where critical data physically resides

• 52% don’t know who actually has access

• 78% allow AI models to issue queries without DLP checkpoints

• 42% have “shadow data flows” created by AI tools

Purview DSPM (Data Security Posture Management) in 2026 performs:

• automatic detection of sensitive data (PII/PHI/PCI/IP)

• exposure scoring

• root-cause analysis (RCA) for AI outputs (“Where did the model get this text?”)

• leakage prediction via Purview Risk Simulation

The result is unpleasant:

internal risks constitute 62–70% of all potential leak points.
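To make “exposure scoring” concrete, here is a purely illustrative model with made-up weights; it is not Purview’s scoring method. An asset scores higher the more sensitive its label, the more principals can reach it, and whether an AI agent can query it without a DLP checkpoint. `SENSITIVITY_WEIGHT`, the log scaling of access breadth and the 2× AI multiplier are all assumptions.

```python
import math
from dataclasses import dataclass

# Hypothetical weights per sensitive-information type.
SENSITIVITY_WEIGHT = {"public": 0, "internal": 1, "PII": 3, "PCI": 4, "PHI": 4, "IP": 5}


@dataclass
class DataAsset:
    name: str
    label: str
    principals_with_access: int
    ai_reachable_without_dlp: bool


def exposure_score(asset: DataAsset) -> float:
    """Illustrative score: sensitivity x breadth of access x AI multiplier."""
    breadth = math.log10(1 + asset.principals_with_access)  # 9 -> 1.0, 999 -> 3.0
    multiplier = 2.0 if asset.ai_reachable_without_dlp else 1.0
    return SENSITIVITY_WEIGHT[asset.label] * breadth * multiplier


assets = [
    DataAsset("patents", "IP", 9, False),
    DataAsset("canteen-menu", "public", 5000, True),
    DataAsset("hr-salaries", "PII", 999, True),
]
for a in sorted(assets, key=exposure_score, reverse=True):
    print(f"{a.name:<14} {exposure_score(a):5.1f}")
```

Log scaling keeps a file shared with 10,000 people from drowning out everything else, while the AI multiplier encodes the section’s point: data an agent can query without a DLP checkpoint is exposed twice over.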

5. The AI Risk Stack (2026)

To be continued