Of the shaman are three hands

And a wing from behind his shoulder grows,

From the breath of him

A candle’s flame is born and glows,

And at times he knows himself,

Himself, no longer knows,

While his soul, flung open wide,

Is straining, sings, and overflows.

Of the shaman are three hands,

The world around, a darkened hall,

On the palms of gold are drawn

Unblinking eyes that see through all.

He sees the roseate dawn’s light

Before the sun itself can rise,

When it seemed he only slept

And knew naught beneath the skies.

Of the shaman are three hands,

A garden in the ruby rays,

From the breath of him is kindled, kindled…

0. Prologue: “AI is not a feature. It’s a new attack surface.”

When Microsoft says “AI is the future,”

they politely omit the second half of the sentence:

“…and that future will be breached by the first successful token replay.”

Seriously: the moment LLM agents gained access to:

• SharePoint

• OneDrive

• SQL

• Exchange

• Microsoft Graph

• Teams

• ServiceNow / Jira / Confluence

• internal enterprise APIs

they effectively became:

an AI-enabled internal escalation proxy

And this is what every Security Fireside Chat talks about — not the glossy Ignite/Build presentations.

1. Lesson #1: “AI = the new administrator you never hired.”

1.1. AI has no disciplinary liability

A human employee violates policy → HR calls.

AI violates policy → “Based on your context…” — and carries on.

AI is the first “employee” in history who can break rules with zero consequences.

2. Lesson #2: “AI sees more than it ever should.”

2.1. Horizontal data exposure

Humans work like this:

“I need two files, I’ll open two folders.”

AI works like this:

“I need an answer, I’ll crawl the entire SharePoint estate.”

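This creates horizontal privilege expansion: the AI reaches everything the user’s token can read, not just the two folders the user would have opened.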

And no, setting a policy called “make AI less smart” does not fix this.
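A toy model makes the difference concrete. This is a minimal Python sketch, assuming a hypothetical `Resource` record (site, path, reader groups) rather than real SharePoint permissions: it contrasts everything a token can read with what a single task actually needs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    # Hypothetical resource model: site name, path, and the groups allowed to read it.
    site: str
    path: str
    readers: frozenset[str]


def reachable(resources: list[Resource], user_groups: set[str]) -> list[Resource]:
    """Everything the user's token can read: what an unscoped AI crawl will touch."""
    return [r for r in resources if r.readers & user_groups]


def task_scoped(resources: list[Resource], user_groups: set[str], task_sites: set[str]) -> list[Resource]:
    """Only the readable resources on the sites the task is actually about."""
    return [r for r in reachable(resources, user_groups) if r.site in task_sites]


if __name__ == "__main__":
    estate = [
        Resource("finance", "q3-report.xlsx", frozenset({"finance", "all-staff"})),
        Resource("finance", "budget.xlsx", frozenset({"finance"})),
        Resource("hr", "salaries.xlsx", frozenset({"hr", "all-staff"})),  # over-shared by mistake
        Resource("it", "old-passwords.txt", frozenset({"all-staff"})),    # forgotten file
    ]
    groups = {"finance", "all-staff"}
    print(len(reachable(estate, groups)))                 # 4: the AI's blast radius
    print(len(task_scoped(estate, groups, {"finance"})))  # 2: what the task needs
```

The gap between the two numbers is the blast radius. Over-shared and forgotten files, like the HR spreadsheet and the old password file here, are exactly what an unscoped AI crawl picks up.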

3. Lesson #3: “AI is the new engine for lateral movement.”

And no, that’s not an exaggeration.

3.1. How AI helps attackers move through infrastructure

Example chain:

- Attacker obtains a user token (OAuth/refresh-token theft).

- Attacker uses the AI agent: “Find all related documents.”

- AI builds a Data Map (semantic + graph).

- AI helps the attacker locate:

• credentials inside documents

• forgotten API keys in Confluence

• passwords in ancient .txt files (immortal in OneDrive)

• internal Snowflake/Mongo/Admin UI links

• infrastructure diagrams
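Defenders can run the same search before an attacker’s AI session does. A minimal sketch of a credential sweep over document text that has already been extracted (the extraction step is assumed); the regexes are illustrative only, and dedicated secret scanners ship far larger rule sets.

```python
import re

# Illustrative patterns only; tune them for your own estate.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*\S+"),
    "connection_string": re.compile(r"(?i)\b(?:mongodb(?:\+srv)?|postgres(?:ql)?)://[^\s:@]+:[^\s@]+@"),
}


def scan_documents(docs: dict[str, str]) -> list[tuple[str, str]]:
    """Return sorted (document name, finding type) pairs, one per pattern match."""
    findings = []
    for name, text in docs.items():
        for kind, pattern in SECRET_PATTERNS.items():
            for _ in pattern.finditer(text):
                findings.append((name, kind))
    return sorted(findings)
```

Every hit is a file the AI would have happily surfaced; rotating the secret and deleting the file beats hoping nobody asks.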

A simplified real example:

Attacker (replaying token): generate architecture overview

AI: (outputs diagrams and list of internal services)

Attacker: expand details about identity federation

AI: (describes SSO structure)

Attacker: list related admin endpoints

AI: (returns internal admin panels)

All of this looks like legitimate user activity: every request carries a valid token and goes through a sanctioned AI interface.
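Since each request is individually legitimate, detection has to look at the session as a whole. One possible heuristic, sketched minimally: count how many distinct reconnaissance themes a single session touches. The theme keywords and the threshold of three are assumptions for illustration, not tuned values.

```python
# Hypothetical reconnaissance themes and keywords that suggest them.
RECON_THEMES = {
    "architecture": ("architecture", "diagram", "topology"),
    "identity": ("single sign-on", "identity federation", "adfs", "saml"),
    "admin_surface": ("admin endpoint", "admin panel", "management console"),
    "secrets": ("password", "api key", "credential"),
}


def themes_in(prompt: str) -> set[str]:
    """Return the recon themes whose keywords appear in one prompt."""
    text = prompt.lower()
    return {theme for theme, words in RECON_THEMES.items() if any(w in text for w in words)}


def session_is_suspicious(prompts: list[str], threshold: int = 3) -> bool:
    """Flag a session whose prompts, taken together, span `threshold` or more themes."""
    seen: set[str] = set()
    for prompt in prompts:
        seen |= themes_in(prompt)
    return len(seen) >= threshold
```

Fed the three attacker prompts above, `session_is_suspicious` returns `True`; an ordinary sales query does not trip it. Keyword matching is easy to evade, so treat this as the shape of the idea, not a finished detector.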

4. Lesson #4: “AI does not understand Zero Trust — you must teach it.”

Zero Trust = verify explicitly + least privilege + assume breach.

LLM = “I want to be helpful — here’s everything I found.”

Microsoft admits as much in its Digital Defense Report:

“LLMs undermine least-privilege by default unless reinforced with governance layers.”

Because:

• LLMs don’t understand access policies

• LLMs don’t enforce ACLs

• LLMs don’t know compliance boundaries

• LLMs don’t recognise sensitivity levels

• LLMs don’t evaluate risk contexts

• LLMs don’t request permission

You must graft the brains of Conditional Access (CA) + Purview + DLP onto the AI before it touches your data.
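In practice, grafting those brains on means enforcing policy in code between retrieval and the model, never inside the prompt. A minimal sketch, assuming every retrieved chunk carries its ACL groups and a sensitivity label; the label ladder is hypothetical and only loosely modelled on Purview-style labels, and `device_compliant` stands in for whatever your Conditional Access evaluation returns.

```python
from dataclasses import dataclass

# Hypothetical label ladder; map your real sensitivity labels onto an ordering like this.
LABEL_RANK = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]
    label: str


@dataclass(frozen=True)
class CallerContext:
    groups: frozenset[str]
    max_label: str          # highest label this caller may see
    device_compliant: bool  # stand-in for a Conditional Access outcome


def authorize_chunks(chunks: list[Chunk], ctx: CallerContext) -> list[Chunk]:
    """Drop every chunk the caller may not see *before* it reaches the model."""
    ceiling = LABEL_RANK[ctx.max_label]
    if not ctx.device_compliant:
        # Risky context: cap access at "General" regardless of group membership.
        ceiling = min(ceiling, LABEL_RANK["General"])
    allowed = []
    for chunk in chunks:
        if not (chunk.allowed_groups & ctx.groups):
            continue  # ACL check: no shared group, no access
        if LABEL_RANK.get(chunk.label, max(LABEL_RANK.values())) > ceiling:
            continue  # unknown labels are treated as the most sensitive tier
        allowed.append(chunk)
    return allowed
```

The model never gets a vote: what it cannot see, it cannot leak. Unknown labels fail closed.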

5. Lesson #5: “AI introduces a new internal supply-chain risk.”

5.1. A third-party Copilot plugin = someone else’s code inside your network

A plugin may be built by an external vendor.

What can it do?

• send data outside your tenant

• log everything

• cache queries

• treat your SQL as its personal playground

• use the user’s OAuth privileges

• generate malicious scripts

• issue HTTP calls

If that’s not a supply-chain threat, what is?
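A cheap first control is to review what a plugin asks for before anyone consents to it. A minimal sketch, assuming the plugin’s requested Microsoft Graph permissions and outbound domains have been exported into a plain dict; the manifest shape and the approved domains are hypothetical, while the permission names are real Graph permissions.

```python
# Real Microsoft Graph permission names that grant broad write or tenant-wide reach.
HIGH_RISK_SCOPES = {
    "Mail.Send",
    "Mail.ReadWrite",
    "Files.ReadWrite.All",
    "Sites.ReadWrite.All",
    "Sites.FullControl.All",
    "Directory.ReadWrite.All",
    "Application.ReadWrite.All",
    "RoleManagement.ReadWrite.Directory",
}

# Hypothetical allowlist of domains a plugin may call.
APPROVED_EGRESS = {"graph.microsoft.com", "api.vendor.example"}


def review_plugin(manifest: dict) -> list[str]:
    """Return human-readable findings; an empty list means nothing was flagged."""
    findings = []
    for scope in sorted(set(manifest.get("scopes", [])) & HIGH_RISK_SCOPES):
        findings.append(f"high-risk scope: {scope}")
    for domain in sorted(set(manifest.get("egress_domains", [])) - APPROVED_EGRESS):
        findings.append(f"unapproved egress: {domain}")
    if manifest.get("publisher_verified") is not True:
        findings.append("publisher not verified")
    return findings
```

A non-empty result does not prove the plugin is malicious; it means a human should read the vendor’s data-handling terms before consent is granted.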

6. Lesson #6: “Prompt Injection = the new XSS, only worse.”

6.1. Why prompt injection is more dangerous than XSS

Because:

• XSS is sandboxed by the browser

• prompt injection inherits the user’s privileges

• AI executes commands, not just renders text

• AI can call APIs

• AI can modify data

• AI can run loops (autonomous agents)

Example: cross-file prompt injection

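A hidden cell in an Excel workbook contains an instruction addressed to the AI, not to the human reader.

When an AI agent reads the file, it executes the instruction.

What the instruction calls “for reporting” is, in reality, “for exfiltration”.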

AI cannot distinguish intent.

It simply obeys.
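Filters alone will not fix prompt injection, but two habits reduce the exposure: never let the agent read content the human cannot see, and present everything retrieved as data, not instructions. A minimal sketch of both, assuming the workbook has already been parsed into cells with a visibility flag; the `Cell` type and the phrase list are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    ref: str
    value: str
    hidden: bool  # hidden row/column/sheet, or text formatted to be invisible


# Illustrative phrases that read like instructions to a model rather than data.
INSTRUCTION_LIKE = re.compile(
    r"(?i)\b(ignore (all|any|previous) instructions|you are an? (ai|assistant)|"
    r"send (this|the|all) .* to|forward .* to|do not (tell|mention))"
)


def quarantine(cells: list[Cell]) -> tuple[list[Cell], list[str]]:
    """Split cells into those passed to the agent and refs of cells held for review."""
    passed, held = [], []
    for cell in cells:
        if cell.hidden or INSTRUCTION_LIKE.search(cell.value):
            held.append(cell.ref)
        else:
            passed.append(cell)
    return passed, held


def as_untrusted_data(cells: list[Cell]) -> str:
    """Wrap content in explicit delimiters so the prompt marks it as data, not commands."""
    body = "\n".join(f"{c.ref}: {c.value}" for c in cells)
    return f"<untrusted_document>\n{body}\n</untrusted_document>"
```

Delimiters lower the odds that a model follows embedded text; they do not make it impossible. The real backstop is that the agent reading this content should not hold the privileges to exfiltrate anything in the first place.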

7. Lesson #7: “AI turns old vulnerabilities into new catastrophes.”

7.1. OAuth consent phishing

Previously: one of many techniques.

In 2026: the primary route to steal AI privileges.

7.2. Token replay

Previously: a nuisance.

Now: a universal disguise for infiltrating AI agents.
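Replay is invisible per request because the token is valid; it shows up when one session is used from incompatible contexts. A minimal sketch of that heuristic, assuming token-use events already carry a session identifier, country and device ID (the field names are hypothetical; map them from your actual sign-in logs).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class TokenUse:
    session_id: str  # hypothetical: identifier shared by tokens from one sign-in
    when: datetime
    country: str
    device_id: str


def replay_suspects(events: list[TokenUse], window: timedelta = timedelta(hours=1)) -> set[str]:
    """Sessions used from a new device, or from two countries within `window`."""
    suspects: set[str] = set()
    first_seen: dict[str, TokenUse] = {}
    last_seen: dict[str, TokenUse] = {}
    for event in sorted(events, key=lambda e: e.when):
        first = first_seen.setdefault(event.session_id, event)
        prev = last_seen.get(event.session_id)
        if event.device_id != first.device_id:
            suspects.add(event.session_id)  # token left the device it started on
        elif prev and prev.country != event.country and event.when - prev.when <= window:
            suspects.add(event.session_id)  # "impossible travel" inside one session
        last_seen[event.session_id] = event
    return suspects
```

Binding tokens to the device they were issued to is the stronger fix; detection like this is the fallback where binding is not available.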

7.3. Device code phishing

Previously: amusing.

Now: a way to hijack the entire AI estate.

7.4. Exchange Online (EXO)/Teams abuse via Graph

Previously: a noisy annoyance.

Now: seamless AI-driven content exfiltration.

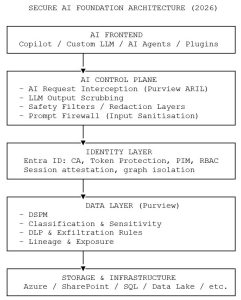

8. Architecture: “Secure AI Foundation” without the marketing glitter

Now the engineering reality.

Here is the architecture you actually need:

9. The mistakes 90% of companies make (and that Microsoft subtly hints at)

9.1. Mistake #1 — giving AI direct access to data

Typical scenario:

“Come on, it’s just Copilot, what could it possibly do?”

Answer:

Everything.

Like your sysadmin — only faster.

9.2. Mistake #2 — no AI interceptor

Every AI system must include:

• input firewall

• output firewall

• tool-permissioning

• risk scoring

• lineage tracking

Without this, it’s not AI.

It’s an exfiltration engine with an API.
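The shape of such an interceptor fits in a few dozen lines. A minimal sketch under loud assumptions: `call_model` stands in for whatever model endpoint you use, the blocked request patterns, the redaction rules and the internal domain `corp.example` are hypothetical, and tool-permissioning is left out to keep it short.

```python
import re
import uuid
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical input rule: requests the gateway refuses outright.
BLOCKED_INPUT = re.compile(r"(?i)\b(list (all )?admin endpoints|dump (all )?credentials)\b")
# Hypothetical output rules: JWT-like tokens and hosts under an assumed internal domain.
JWT_LIKE = re.compile(r"\beyJ[\w-]+\.[\w-]+\.[\w-]+")
INTERNAL_HOST = re.compile(r"\b[\w-]+(?:\.[\w-]+)*\.corp\.example\b")


@dataclass
class Interceptor:
    call_model: Callable[[str], str]  # stand-in for the real model endpoint
    lineage: list[dict] = field(default_factory=list)

    def handle(self, user: str, prompt: str, source_ids: list[str]) -> str:
        request_id = str(uuid.uuid4())
        # 1. Input firewall: refuse before the model ever sees the prompt.
        if BLOCKED_INPUT.search(prompt):
            self._record(request_id, user, source_ids, 100, "blocked_input")
            return "Request blocked by policy."
        raw = self.call_model(prompt)
        # 2. Output firewall: redact, and count what had to be removed.
        redacted, n_tokens = JWT_LIKE.subn("[REDACTED TOKEN]", raw)
        redacted, n_hosts = INTERNAL_HOST.subn("[INTERNAL HOST]", redacted)
        # 3. Risk scoring: a crude additive score based on the redactions.
        risk = 40 * n_tokens + 10 * n_hosts
        # 4. Lineage: who asked, which sources fed the answer, what happened.
        self._record(request_id, user, source_ids, risk, "answered")
        return redacted

    def _record(self, request_id: str, user: str, source_ids: list[str], risk: int, outcome: str) -> None:
        self.lineage.append(
            {"id": request_id, "user": user, "sources": list(source_ids), "risk": risk, "outcome": outcome}
        )
```

Every request leaves a lineage record, including the blocked ones; that trail is what lets you answer “who asked the AI what, and which documents did it use?” after an incident.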

9.3. Mistake #3 — trusting refresh tokens

A refresh token = an immortal pass.

If an attacker steals it,

they remain your “employee” until you rebuild the tenant.
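On the token-issuing side, the standard countermeasure is refresh-token rotation with reuse detection, as described in the OAuth 2.0 Security Best Current Practice: every refresh returns a new token, and if an already-used token ever comes back, the whole token family is revoked, because someone is replaying it. A minimal in-memory sketch; the class and method names are hypothetical, and this illustrates the mechanism rather than any Entra ID setting.

```python
import secrets


class RefreshTokenStore:
    """In-memory rotation with reuse detection: one token family per original sign-in."""

    def __init__(self) -> None:
        self._family_of: dict[str, str] = {}  # token -> family id
        self._current: dict[str, str] = {}    # family id -> the only token still valid
        self._revoked: set[str] = set()       # revoked family ids

    def issue(self) -> str:
        family = secrets.token_hex(8)
        token = secrets.token_urlsafe(32)
        self._family_of[token] = family
        self._current[family] = token
        return token

    def refresh(self, token: str) -> str:
        family = self._family_of.get(token)
        if family is None or family in self._revoked:
            raise PermissionError("unknown or revoked token")
        if self._current[family] != token:
            # An old token came back: someone is replaying it, so kill the whole family.
            self._revoked.add(family)
            raise PermissionError("refresh token reuse detected; session revoked")
        new_token = secrets.token_urlsafe(32)
        self._family_of[new_token] = family
        self._current[family] = new_token
        return new_token
```

The legitimate user gets signed out as well. That is the intended trade-off: a forced re-authentication is cheaper than an attacker who stays on the payroll.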

9.4. Mistake #4 — giving agents too many Tools

An AI agent should not be allowed to:

• write into SharePoint

• run PowerShell

• execute SQL updates

• create documents

• send emails

• create users in Entra

Yet many companies grant these rights.

And then cry.
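Tool access should be deny-by-default, with read tools granted per agent and anything that writes or sends gated behind a human. A minimal sketch, assuming a hypothetical registry in which every tool declares whether it reads or writes; tool and agent names are illustrative.

```python
from enum import Enum


class Effect(Enum):
    READ = "read"
    WRITE = "write"


# Hypothetical tool registry: every tool declares whether it changes anything.
TOOLS = {
    "search_documents": Effect.READ,
    "summarise_file": Effect.READ,
    "send_email": Effect.WRITE,
    "run_powershell": Effect.WRITE,
    "create_entra_user": Effect.WRITE,
}

# Per-agent grants; anything not listed is denied.
GRANTS = {
    "hr-assistant": {"search_documents", "summarise_file", "send_email"},
}


def authorize_tool(agent: str, tool: str, human_approved: bool = False) -> bool:
    """Deny unknown or ungranted tools; require human approval for any write."""
    if tool not in TOOLS or tool not in GRANTS.get(agent, set()):
        return False
    if TOOLS[tool] is Effect.WRITE and not human_approved:
        return False
    return True
```

Note that `run_powershell` stays denied even with human approval, because it was never granted to that agent in the first place.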

10. Summary of Chapter 3: “AI is unrestricted until you restrict it.”

AI is not a tool.

It is a new form of privilege.

Give it direct access to data — it behaves like root.

Microsoft hints between the lines:

• AI without Purview = an open API to corporate secrets

• AI without contextual CA = token-driven exploitation

• AI without an output firewall = self-inflicted leakage

• AI without isolation = lateral movement with extras

rgds,

Alex

… to be continued…