0. Why Purview isn’t “just another admin console” but your company’s built-in self-preservation mechanism

Purview 2026 is no longer the MIP (Microsoft Information Protection) + Compliance Center bundle of 2020–2022.

It has evolved into a full-blown combination of:

• Data Security Posture Management (DSPM)

• AI Safety & Governance Layer

• Unified Data Classification Engine

• Zero Trust Data Enforcement

• Copilot-aware DLP controls

• Risk simulation / risk forecasting models

• Auto-labeling via ML clusters

• Data lineage + data maps + sensitivity propagation

And the funniest part?

In 2023 Purview looked “slow and annoying.”

In 2026 it’s the only thing preventing your payroll spreadsheet from being leaked by Copilot directly onto LinkedIn.

1. Purview DSPM: dissected under a microscope

In 2026 Microsoft positions DSPM not as an “audit tool” but as a cybernetic feedback loop: it watches your data continuously and reacts to threats against it the way an immune system reacts to viruses.

1.1. DSPM internals (“under the bonnet”)

| Module | Purpose | Actual engineering meaning |
|---|---|---|
| Data Discovery Engine | Scans storage, documents, tables | Crawling + pattern matching + ML classifiers |
| Exposure Analytics | Determines access risk levels | Who, where, why and how someone can read a file |
| Data Behaviour Models | Analyses user behaviour | AI evaluation of 135+ access signals |
| AI Path Analysis | Tracks how AI agents use data | Model: Input → Execution → Output |
| Data Lineage Tracking | Tracks data “ancestry” | A genealogical tree of files |
| Auto-Label Engine | Automatic label application | ML trained on real enterprise datasets |

Purview DSPM is not a static audit — it’s a living model. It continuously monitors:

• what is happening to your data,

• who is touching it,

• how AI interacts with it,

• and where tomorrow’s weak points will emerge.
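
To make the “Exposure Analytics” row a little more concrete, here is a minimal sketch of a who/where/how exposure score for a single file. Everything in it (the `AccessGrant` type, the label weights, the scoring rule and the thresholds) is a hypothetical illustration, not Purview’s actual exposure model.

```python
from dataclasses import dataclass

# Hypothetical weights; real label names and priorities are tenant-defined.
SENSITIVITY_WEIGHT = {"Public": 0, "General": 1, "Confidential": 3, "Highly Confidential": 5}


@dataclass
class AccessGrant:
    principal: str     # who: a user, a group, or "Everyone"
    is_external: bool  # where: outside the home tenant?
    via_link: bool     # how: shared link rather than direct permission


def exposure_score(label: str, grants: list[AccessGrant]) -> int:
    """Sensitivity weight times breadth of access (illustrative scoring only)."""
    breadth = 0
    for g in grants:
        breadth += 1                 # every grant widens exposure a little
        if g.principal == "Everyone":
            breadth += 5             # org-wide access: classic oversharing signal
        if g.is_external:
            breadth += 3
        if g.via_link:
            breadth += 2
    return SENSITIVITY_WEIGHT[label] * breadth


def risk_level(score: int) -> str:
    if score >= 30:
        return "high"
    if score >= 10:
        return "medium"
    return "low"
```

For example, a Highly Confidential payroll file shared with Everyone through a link scores 5 × (1 + 5 + 2) = 40 and lands in “high”; the same grant on a Public file scores 0.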

2. AI-ready governance: Purview as the “air-traffic controller” for AI

By 2026, Purview has learned to recognise:

• AI-agent queries,

• Microsoft 365 Copilot contextual operations,

• plugin/tool/skill commands,

• data access initiated by LLMs via Microsoft Fabric, Semantic Index, Graph Connectors.

This allows Purview to observe AI data-consumption chains that used to be invisible.

Purview sees all of it.

Real-world alerts are already surfacing:

“AI-agent requested 12 high-risk datasets without explicit justification.”
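
An alert like the one quoted above can be approximated with a simple rule over access events tagged by actor type. This is a hypothetical sketch: the `AccessEvent` fields, the `"ai_agent"` actor type and the threshold of 10 datasets are assumptions for illustration, not Purview’s event schema or detection logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class AccessEvent:
    actor: str                  # user or agent identifier (hypothetical format)
    actor_type: str             # "user" or "ai_agent"
    dataset: str
    risk: str                   # "low" | "medium" | "high", from classification
    justification: Optional[str] = None


def unjustified_ai_access_alerts(events, threshold=10):
    """One alert per AI agent that touched >= threshold distinct high-risk
    datasets without recording a justification."""
    touched = defaultdict(set)
    for e in events:
        if e.actor_type == "ai_agent" and e.risk == "high" and not e.justification:
            touched[e.actor].add(e.dataset)
    return [
        f"AI-agent {actor} requested {len(ds)} high-risk datasets without explicit justification."
        for actor, ds in sorted(touched.items())
        if len(ds) >= threshold
    ]
```

Human access and justified agent access are deliberately ignored, so the rule only fires on the pattern the alert describes.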

3. Purview Classification Engine — how it actually understands your tables

Purview classifies data using three layers:

3.1. Pattern-based

Regexes, dictionaries, keywords.

Effective but primitive.
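
In practice, pattern-based detection is rarely a bare regex. Purview’s sensitive information types combine a primary pattern with extra checks such as checksums and corroborating keywords near the match. The sketch below imitates that idea for card numbers, assuming a Luhn check, a hypothetical keyword list and a 300-character proximity window; it is a simplified stand-in, not Microsoft’s definition of the credit card type.

```python
import re

# Primary pattern: 13-16 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
# Hypothetical corroborating keywords.
KEYWORDS = ("credit card", "card number", "visa", "mastercard", "cvv")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str, proximity: int = 300) -> list[tuple[str, str]]:
    """Return (digits, confidence) for each checksum-valid match."""
    lowered = text.lower()
    results = []
    for m in CARD_PATTERN.finditer(text):
        digits = re.sub(r"[ -]", "", m.group())
        if not luhn_valid(digits):
            continue  # looks like a card number but fails the checksum: ignore
        window = lowered[max(0, m.start() - proximity): m.end() + proximity]
        confidence = "high" if any(k in window for k in KEYWORDS) else "medium"
        results.append((digits, confidence))
    return results
```

The checksum removes most random 16-digit strings; the keyword window only raises confidence, it never creates a match on its own.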

3.2. ML-based classifiers

Models trained on:

• financial documents

• HR datasets

• NDAs

• medical records

• government forms

• legal contract templates

They understand context.

For example, “salary” inside a payroll table ≠ “salary” inside a job advert.
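
To show how surrounding words change the meaning of “salary”, here is a toy multinomial naive Bayes classifier with Laplace smoothing, trained on four invented one-line examples. Naive Bayes is a swapped-in technique chosen for brevity; Purview’s trainable classifiers are not documented as working this way, and the training strings are made up for illustration.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


class NaiveBayes:
    """Multinomial naive Bayes with Laplace (add-one) smoothing."""

    def __init__(self):
        self.word_counts = {}          # label -> Counter of token frequencies
        self.doc_counts = Counter()    # label -> number of training documents
        self.vocab = set()

    def fit(self, docs):
        for text, label in docs:
            tokens = tokenize(text)
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            n_tokens = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for tok in tokenize(text):
                if tok not in self.vocab:
                    continue  # words never seen in training carry no evidence here
                score += math.log((counts[tok] + 1) / (n_tokens + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy training data: "salary" appears in both classes.
TRAINING = [
    ("employee id salary net pay tax deduction bank account", "payroll"),
    ("salary gross pay employee id pension deduction", "payroll"),
    ("we are hiring salary competitive apply now remote role", "job_advert"),
    ("join our team salary range apply today benefits", "job_advert"),
]
```

With this data, `"Employee ID 4411 salary net pay"` is classified as `payroll` and `"Apply now salary competitive"` as `job_advert`: the shared word “salary” barely moves the score, and the context words decide.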

3.3. Contextual propagation

When one file is classified, Purview propagates its labels to:

• copies

• derivatives

• AI-generated content

• attachments

• summaries/extracts

This is critical.

If Copilot generates a document based on a Confidential file, the output also inherits Confidential.
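
Microsoft documents that content Microsoft 365 Copilot creates from labelled files inherits the sensitivity label with the highest priority among them. The sketch below assumes that rule and a hypothetical four-label ordering; real label names and priorities are defined per tenant.

```python
from typing import Optional

# Hypothetical tenant label order: later in the list = higher priority.
LABEL_PRIORITY = ["Public", "General", "Confidential", "Highly Confidential"]


def inherited_label(source_labels: list[Optional[str]]) -> Optional[str]:
    """Label for new content derived from the given sources: the highest-priority
    label among them, or None if no source is labelled."""
    labelled = [lbl for lbl in source_labels if lbl is not None]
    if not labelled:
        return None
    return max(labelled, key=LABEL_PRIORITY.index)
```

Because the inherited label travels with the derived file, a summary of that draft inherits it again: `inherited_label([inherited_label(["Confidential", "General"]), "Public"])` is `"Confidential"`.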

4. Purview + Copilot + AI Agents = an architecture of risk

4.1. Why governance must be placed at the AI layer

AI models:

• submit queries on behalf of users,

• execute commands,

• create files,

• read databases,

• call APIs,

• generate scripts.

That is full access, zero understanding, and no innate sense of policy.

Purview is the only component standing between:

“model wants data” → “model gets data”.

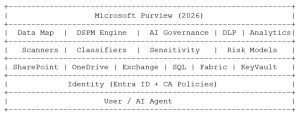

5. Purview Architecture 2026 — deep R&D breakdown

Engineers’ diagram (not marketing fluff):

6. How Purview prevents AI-driven data leaks (technical mechanisms)

Microsoft added five critical safety layers.

6.1. AI Request Interception Layer (ARIL)

This module:

• inspects every AI-generated request,

• evaluates data sensitivity,

• compares against policies,

• determines response risk,

• logs entire chains (“Input → Data → Output”).

If Copilot attempts to fetch salary tables, ARIL returns:

This is semantic enforcement, not keyword checking.
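
The behaviour described in 6.1 can be sketched as a policy decision keyed on the dataset’s classification label rather than on words in the prompt, with every request logged as an Input → Data → Output record. `AIRequest`, `intercept`, the `POLICY` table and the log fields are hypothetical names for illustration only; they are not a Purview API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIRequest:
    agent: str
    on_behalf_of: str
    prompt: str
    dataset: str
    dataset_label: str              # from classification, not guessed from the prompt text
    justification: Optional[str] = None


@dataclass
class Decision:
    action: str                     # "allow" | "require_justification" | "block"
    reason: str


# Hypothetical policy: what happens for each label when no justification is given.
POLICY = {
    "Public": "allow",
    "General": "allow",
    "Confidential": "require_justification",
    "Highly Confidential": "block",
}

AUDIT_LOG: list[dict] = []


def intercept(req: AIRequest) -> Decision:
    action = POLICY.get(req.dataset_label, "block")   # unknown labels fail closed
    if action == "require_justification" and req.justification:
        action = "allow"
    decision = Decision(action, f"dataset label is {req.dataset_label}")
    # Log the whole chain: Input -> Data -> Output (here, the decision).
    AUDIT_LOG.append({
        "agent": req.agent,
        "user": req.on_behalf_of,
        "input": req.prompt,
        "data": req.dataset,
        "output": decision.action,
    })
    return decision
```

A request such as “summarise the Q3 numbers” against a Highly Confidential payroll table never mentions salaries, yet it is blocked because of the label on the data, while “what are the salary bands?” against a Public handbook is allowed.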

6.2. AI Output Scrubbing Engine

This scans:

• text,

• code snippets,

• SQL,

• JSON,

• tables,

• attachments.

If the model outputs PII:

• it is redacted,

• masked,

• rewritten,

• or blocked.

This is a security gateway layered on top of the LLM.
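
A minimal sketch of the scrub step, assuming two regex detectors (email addresses and US SSN-style numbers) and a hypothetical per-type action: mask emails, redact SSNs, and withhold the whole output when a type listed in `block_on` appears. The “rewrite” action needs a model in the loop and is left out.

```python
import re

# Hypothetical detectors; a real gateway would reuse its classification engine.
DETECTORS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def _mask_email(m: re.Match) -> str:
    local, _, domain = m.group().partition("@")
    return local[:1] + "***@" + domain          # mask: recognisable, but hides the person


REPLACERS = {
    "email": _mask_email,
    "us_ssn": lambda m: "[REDACTED:us_ssn]",    # redact: remove the value entirely
}


def scrub(output: str, block_on: frozenset = frozenset()) -> tuple[str, bool]:
    """Return (text, blocked). Any detected type listed in block_on withholds the output."""
    found = {name for name, rx in DETECTORS.items() if rx.search(output)}
    if found & block_on:
        return "", True
    for name, rx in DETECTORS.items():
        output = rx.sub(REPLACERS[name], output)
    return output, False
```

For example, `scrub("Contact jane.doe@contoso.com, SSN 123-45-6789.")` returns `("Contact j***@contoso.com, SSN [REDACTED:us_ssn].", False)`.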

6.3. Data Lineage Tracking + AI interactions

Purview can construct full graphs like:

If any step leaks, Purview surfaces it.
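
A lineage graph is a directed graph from sources to derivatives and destinations, so “did any step leak?” becomes a reachability question. The sketch below uses a hypothetical edge list and an `is_external` predicate that assumes `contoso.com` is the home tenant, then runs a breadth-first search from a sensitive file to every external node it can reach.

```python
from collections import deque

# Hypothetical lineage edges: artefact -> artefacts derived from or sent from it.
LINEAGE = {
    "payroll.xlsx": ["copilot_summary.docx"],
    "copilot_summary.docx": ["mail:partner@fabrikam.com", "teams:hr-chat"],
    "handbook.pdf": ["bot:faq-answer"],
}


def is_external(node: str) -> bool:
    """Assumption: mail recipients outside contoso.com are external destinations."""
    return node.startswith("mail:") and not node.endswith("@contoso.com")


def leak_paths(graph: dict[str, list[str]], source: str) -> list[list[str]]:
    """Breadth-first search from a sensitive source; returns one path (the shortest)
    to each reachable external node."""
    paths = []
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if is_external(node):
            paths.append(path)
            continue                      # data has left the tenant; stop expanding here
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return paths
```

Here the payroll file reaches a partner mailbox through a Copilot summary, so that three-step path is reported, while the handbook never leaves the tenant.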

6.4. Zero-Trust Enforcement for AI

Even if:

• the user has access,

• Copilot has access,

• data resides inside Microsoft Cloud,

Purview still enforces:

• Conditional Access (CA) evaluation, driven by context,

• justification prompts,

• output restrictions.

This breaks the old model of

“AI = trusted super-user.”
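
The point of 6.4 is that permissions are necessary but not sufficient. Here is a hypothetical, Conditional Access-style evaluation of an AI request; the `Context` fields, decision names and rules are assumptions for illustration and are not the Microsoft Entra Conditional Access engine.

```python
from dataclasses import dataclass


@dataclass
class Context:
    user_has_permission: bool
    agent_has_permission: bool
    device_compliant: bool
    sign_in_risk: str            # "low" | "medium" | "high"
    justification: str = ""


def evaluate(ctx: Context, label: str) -> tuple[str, list[str]]:
    """Return (decision, reasons). Permissions are checked first but never suffice alone."""
    if not (ctx.user_has_permission and ctx.agent_has_permission):
        return "deny", ["missing permission"]
    reasons = []
    if not ctx.device_compliant:
        reasons.append("non-compliant device")
    if ctx.sign_in_risk == "high":
        reasons.append("high sign-in risk")
    if reasons:
        return "deny", reasons
    if label in {"Confidential", "Highly Confidential"} and not ctx.justification:
        return "prompt_for_justification", ["sensitive label without justification"]
    if label == "Highly Confidential":
        return "allow_restricted_output", ["output limited to a summary, no raw rows"]
    return "allow", []
```

A user and agent who both hold permission still get `prompt_for_justification` on Confidential data until they state a reason, and even then Highly Confidential data only comes back as restricted output.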

6.5. AI Risk Simulation Engine

Purview analyses:

• data-usage patterns,

• agent behaviour,

• historical user actions,

• anomalies,

• potential leakage flows.

Then forecasts:

“High probability of sensitive data being exposed via AI output within 30 days.”

This is the first enterprise-grade tool performing AI-native risk prediction.
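
One simple way to turn history into a statement like the forecast above is to assume exposure events arrive as a Poisson process: with an estimated rate of λ events per day, the probability of at least one event in T days is 1 - e^(-λT). This is a swapped-in textbook model with a hypothetical 0.8 threshold, not Purview’s forecasting method.

```python
import math


def exposure_forecast(events_observed: int, days_observed: int,
                      horizon_days: int = 30, threshold: float = 0.8) -> tuple[float, str]:
    """Poisson model: P(at least one event in horizon) = 1 - exp(-rate * horizon)."""
    if days_observed <= 0:
        raise ValueError("days_observed must be positive")
    rate = events_observed / days_observed       # maximum-likelihood events per day
    p = 1 - math.exp(-rate * horizon_days)
    level = "High" if p >= threshold else "Low"
    return p, (f"{level} probability of sensitive data being exposed "
               f"via AI output within {horizon_days} days.")
```

With 9 AI-related exposure events in the last 90 days, the rate is 0.1 per day and the 30-day probability is 1 - e^(-3), roughly 0.95, so the sketch emits the “High probability” message. A single rate ignores the behavioural signals listed above; folding those in is what a richer model would add.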

7. Purview API Layer: where things break — and where they’re protected

Purview 2026 exposes three API surfaces:

7.1. Data Governance API

Label operations, policy creation, auditing.

7.2. DSPM API

Used for:

• pulling risk insights,

• triggering scans,

• managing exposure controls.

7.3. AI Governance API

A middleware layer between Purview and:

• Copilot Studio,

• Azure AI Foundry,

• Semantic Kernel Agents,

• Graph Connectors.

Attackers love it because it allows:

• metadata queries,

• dataset probing,

• dependency inference.

Purview counters with:

• monitoring,

• Microsoft Defender signals,

• query blocking when behaviour turns suspicious.
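
The “query blocking when behaviour turns suspicious” idea can be sketched as a sliding-window count of the distinct datasets each client asks about, since dataset probing tends to touch many datasets quickly. The class name, thresholds and the choice of signal are hypothetical; this is not Defender’s or Purview’s detection logic.

```python
from collections import defaultdict, deque


class ProbeDetector:
    """Flag a client that queries metadata for too many distinct datasets within a time window."""

    def __init__(self, max_distinct: int = 20, window_seconds: float = 60.0):
        self.max_distinct = max_distinct
        self.window = window_seconds
        self.history = defaultdict(deque)    # client -> deque of (timestamp, dataset)

    def observe(self, client: str, dataset: str, ts: float) -> bool:
        """Record a query at time ts (seconds); return True if the client should be blocked."""
        q = self.history[client]
        q.append((ts, dataset))
        while q and q[0][0] <= ts - self.window:
            q.popleft()                      # drop queries that fell out of the window
        distinct = {d for _, d in q}
        return len(distinct) > self.max_distinct
```

Counting distinct datasets rather than raw queries means a legitimate agent that polls one table repeatedly is not flagged, while a slow prober spread over hours also stays under the threshold, which is a known trade-off of any fixed window.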

8. Where Purview breaks (because no system is perfect)

8.1. Purview cannot see custom AI tools by default

If you wired your own LLM via a REST endpoint, Purview sees nothing until you:

• enable AI Monitoring,

• onboard the tool into AI Governance scope.
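
The fix in 8.1 boils down to instrumentation: a custom endpoint stays invisible unless every prompt and response produces an event that something can collect. A minimal wrapper sketch, assuming you supply `call_model` (your REST client) and `emit` (a placeholder for whatever ingestion path your tenant actually uses; no real Purview call is shown, and the event fields are hypothetical).

```python
import time
from typing import Callable


def make_audited_llm(call_model: Callable[[str], str],
                     emit: Callable[[dict], None],
                     app_id: str) -> Callable[[str, str], str]:
    """Wrap a custom model call so every prompt/response pair emits an audit event."""
    def audited(prompt: str, user: str) -> str:
        started = time.monotonic()
        response = call_model(prompt)
        emit({                                   # hypothetical event shape
            "app_id": app_id,
            "user": user,
            "prompt": prompt,
            "response": response,
            "latency_ms": int((time.monotonic() - started) * 1000),
        })
        return response
    return audited
```

For example, wrapping a stub model with `events.append` as the sink records one event per call; in production the stub becomes your REST client and the sink becomes the ingestion path you have actually onboarded.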

8.2. Purview cannot stop leaks through cameras/smartphones

Human-factor leakage remains unsolved:

An employee photographs a Copilot output with a phone → Purview loses visibility.

8.3. Purview cannot scrub the model’s internal memory

If the LLM retains hidden patterns (“memory leakage”), Purview cannot purge them.

9. Final verdict: “A miracle tool? No. A necessary evil? Absolutely.”

Purview is:

• not a silver bullet,

• not flawless,

• not omniscient.

But without it, AI becomes “leakage-as-a-service.”

In 2026, AI security = data governance.

And Purview is the core of that architecture.

rgds,

Alex

… to be continued…