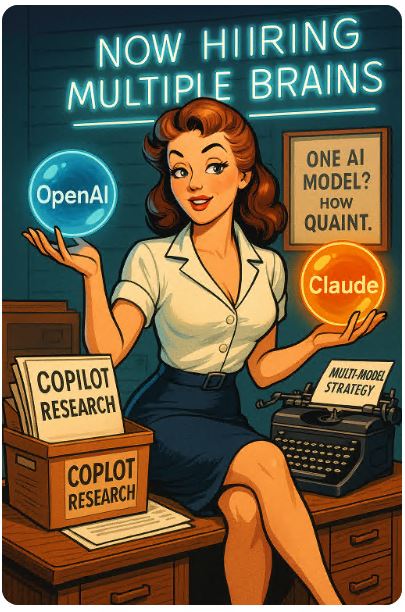

Big news: Microsoft is no longer content with leaning only on OpenAI. They’re bringing Anthropic’s Claude models (Sonnet 4, Opus 4.1) into Microsoft 365 Copilot. You’ll soon be able to pick whether your Copilot uses OpenAI or Claude in certain contexts. Welcome to the multi-model era.

What’s Actually Changing

- Copilot’s Researcher agent can now run on either OpenAI’s deep reasoning models or Anthropic’s Claude Opus 4.1. When you ask Copilot to “analyze market trends” or “build a strategic doc”, you can choose which brain does the heavy lifting.

- In Copilot Studio, where you build your own agents and AI workflows, Claude Sonnet 4 and Opus 4.1 have joined the line-up of available models. You can mix and match, for example Claude for some tasks and OpenAI for others.

- Microsoft assures us that OpenAI is not being thrown out. It remains the default model in many cases. Claude is optional, selected by user choice or by workflow.

- One catch: Claude models are hosted outside Microsoft’s managed environments (Anthropic currently uses AWS). That has latency, data-residency, contract, and compliance implications.

Why Microsoft Is Doing This

This isn’t just a “hey let’s add another AI option” whim. There are sharp motives and strategic reasons:

- Reduce dependence on OpenAI: if you put all your AI eggs in one partner’s basket, you are exposed to that partner’s pricing, roadmap, and corporate changes. Microsoft is diversifying.

- Leverage domain strengths: Claude may outperform in certain reasoning, compliance, or safety dimensions. Microsoft aims to pick the best tool for each job rather than forcing one tool on every job.

- Innovation & competition: supporting multiple models stirs competition, both internally and externally. That’s healthier than stagnation.

- Customer flexibility & differentiation: different customers have different requirements (cost, regulation, data locality). Allowing model choice helps tailor Copilot to business constraints.

Risks & What Microsoft Needs to Nail

Because yes, there are risks. Always are.

- Latency & performance hit: Claude models hosted outside Microsoft’s controlled environment mean extra network hops, potential lag, and inconsistent response times.

- Data governance & compliance: when your data is processed on non-Microsoft infrastructure, you must make sure privacy, lawful use, and regulatory compliance still hold.

- Model switching experience: users will try both models. If Claude gives inconsistent or weird outputs, they’ll blame Microsoft. The switching mechanism must be clean and reliable.

- Operational complexity: internally, Microsoft now has dual pipelines to maintain (performance, integration, and monitoring for both OpenAI and Claude models). That’s extra overhead.

- Versioning and feature gaps: OpenAI’s models may get updates faster in Microsoft’s stack, while Claude may lag in features or performance in some niches.

- Vendor trust & dependency shifts: hosting Claude on AWS, a cloud competitor, adds tension. Microsoft is effectively relying on a rival to host parts of its Copilot experience.

What You Should Do (If You’re a Business Thinking of Copilot)

If I were advising an IT or AI team, here’s how I’d approach this:

- Pilot with both models: take existing workflows and run the same prompts through OpenAI and Claude. Compare quality, speed, and consistency.

- Check compliance & legal: verify where data is processed, how logs are handled, and what contract terms are in place.

- Plan fallback & switch logic: if Claude is disabled or fails, agents should revert to OpenAI automatically.

- Set usage guardrails: use access controls to decide who can pick Claude and for which use cases. Don’t let everyone flip models willy-nilly.

- Monitor performance & SLA impact: track latency, errors, and timeouts. If switching models degrades the user experience, roll back or restrict.

- Consider cost trade-offs: Claude may be priced differently (API, licensing). Know how switching models affects your OPEX.
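If you run your own agent code in front of the models, the fallback, guardrail, and monitoring advice above can live in one small routing layer. Below is a minimal Python sketch under that assumption. Everything in it is hypothetical: `ModelRouter`, the `"openai"`/`"claude"` keys, the per-user allow-list, and the stub functions are illustrative names, not part of any Microsoft, OpenAI, or Anthropic API. Inside Copilot and Copilot Studio, the model is picked through Microsoft’s own interface. The sketch only illustrates the decision logic you would want behind that choice.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical model-call signature: prompt in, text out, raises on failure.
# Not a real Copilot / Copilot Studio API; illustrates decision logic only.
ModelFn = Callable[[str], str]


@dataclass
class CallRecord:
    user: str
    model: str
    ok: bool
    latency_s: float


@dataclass
class ModelRouter:
    models: Dict[str, ModelFn]      # model name -> call function
    default: str                    # fallback model, e.g. "openai"
    allowed: Dict[str, List[str]]   # guardrail: user -> models they may pick
    log: List[CallRecord] = field(default_factory=list)

    def _call(self, user: str, name: str, prompt: str) -> str:
        # Time every call and record success/failure for monitoring.
        start = time.perf_counter()
        try:
            result = self.models[name](prompt)
        except Exception:
            self.log.append(CallRecord(user, name, False, time.perf_counter() - start))
            raise
        self.log.append(CallRecord(user, name, True, time.perf_counter() - start))
        return result

    def ask(self, user: str, prompt: str, preferred: Optional[str] = None) -> str:
        # Guardrail: a preferred model is honored only if it is on the user's allow-list.
        choice = preferred if preferred in self.allowed.get(user, []) else self.default
        if choice == self.default:
            return self._call(user, choice, prompt)
        try:
            return self._call(user, choice, prompt)
        except Exception:
            # Fallback: if the non-default model fails, revert to the default.
            return self._call(user, self.default, prompt)

    def error_rate(self, model: str) -> float:
        # Share of logged calls to `model` that failed (0.0 if never called).
        calls = [r for r in self.log if r.model == model]
        return sum(not r.ok for r in calls) / len(calls) if calls else 0.0


# Stand-in model functions for a local dry run (not real API clients).
def stub_openai(prompt: str) -> str:
    return f"openai: {prompt}"


def stub_claude_down(prompt: str) -> str:
    raise TimeoutError("simulated outage")


router = ModelRouter(
    models={"openai": stub_openai, "claude": stub_claude_down},
    default="openai",
    allowed={"analyst": ["openai", "claude"]},
)
a1 = router.ask("analyst", "Summarize Q3", preferred="claude")  # Claude fails, falls back
a2 = router.ask("intern", "Summarize Q3", preferred="claude")   # not allow-listed, uses default
print(a1, a2, router.error_rate("claude"), router.error_rate("openai"))
```

Keeping OpenAI as the default mirrors Microsoft’s stance that Claude is opt-in. In a real pilot, you would replace the stubs with your actual client calls and ship `router.log` to whatever monitoring you already use. The same log doubles as a side-by-side latency and error comparison for the “pilot with both models” step.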

My Verdict

Microsoft weaving Claude into Copilot is a savvy strategic move. Not because Claude is suddenly “better” in every scenario, but because choice is strength — both for Microsoft and for customers.

If this is done well: we’ll get more resilient, modular Copilot experiences; better alignment to use cases; and less risk that OpenAI becomes a bottleneck or point of failure.

If it’s done poorly: users get confused, performance suffers, data leaks happen, and Microsoft ends up with two messes instead of one.

But I’m optimistic — this could be the pivot that turns Copilot from “one-size-fits-all AI assistant” into a customizable, task-aware toolset. Provided Microsoft doesn’t get lazy with integration, security, and support.

Regards for Monday,

Yours,

Alex