0. Prologue

“An AI platform isn’t a model. It’s an organism.”

It has:

• blood (data),

• an immune system (Purview + ZTA),

• a nervous system (identity),

• a brain (the LLM),

• organs (tools/plugins),

• muscles (the execution layer),

• skin (the output firewall),

• memory (vector stores),

• an immune response (Defender + analytics),

• a skeleton (governance + policies),

• metabolism (pipelines),

• and metabolic illnesses (drift, poisoning, leaks).

If you want it to live — you must design it like a living system.

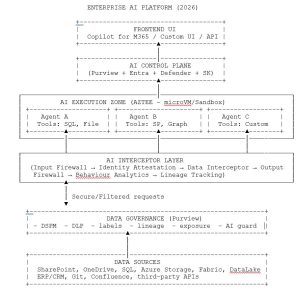

1. The Overall Topology: Enterprise-grade AI Platform

Here it is, end-to-end:

This is the single architecture Microsoft now quietly promotes to strategic clients under the banner of an “AI Security Posture Workshop”.

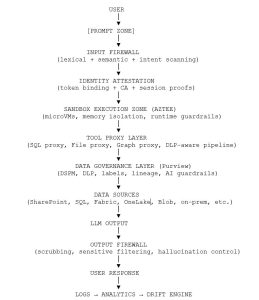

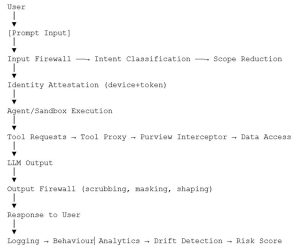

2. AI Data Flow Blueprint (critical diagram)

Full chain, from user request to answer, seen from an infrastructure and security standpoint:

3. Trust Boundaries — the new geography of security

The 2026 AI platform has nine trust zones, each critical:

Z0: User Zone

User, device, MFA, Conditional Access (CA) signals

Z1: Prompt Zone

Everything fed to the model; jailbreak ground zero

Z2: Input Firewall Zone

Lexical & semantic filtering

Z3: Identity Zone

Token, device claims, attestation, session key

Z4: Execution Sandbox Zone

Agent container, runtime isolation

Z5: Tools Zone

SQL/File/HTTP plugins — the most dangerous layer

Z6: Data Interceptor Zone (Purview)

DSPM, DLP, exposure control

Z7: Output Firewall Zone

Redaction, hallucination blocking, sensitive-data shaping

Z8: Analytics Zone

Drift detection, anomaly detection

Every zone must remain isolated.
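“Every zone must remain isolated” becomes testable once zone-to-zone hops are an explicit allowlist and everything else is denied. The sketch below is a minimal Python illustration. The zone names mirror Z0 to Z8, but the particular set of permitted hops is a hypothetical example, not a prescribed topology.

```python
from enum import Enum


class Zone(Enum):
    USER = 0
    PROMPT = 1
    INPUT_FIREWALL = 2
    IDENTITY = 3
    SANDBOX = 4
    TOOLS = 5
    DATA_INTERCEPTOR = 6
    OUTPUT_FIREWALL = 7
    ANALYTICS = 8


# Hypothetical allowlist of boundary crossings (source -> permitted destinations).
# Anything not listed is denied. Analytics (Z8) only receives telemetry out of
# band, so it never appears as a hop on the request path.
ALLOWED_HOPS: dict[Zone, set[Zone]] = {
    Zone.USER: {Zone.PROMPT},
    Zone.PROMPT: {Zone.INPUT_FIREWALL},
    Zone.INPUT_FIREWALL: {Zone.IDENTITY},
    Zone.IDENTITY: {Zone.SANDBOX},
    Zone.SANDBOX: {Zone.TOOLS, Zone.OUTPUT_FIREWALL},
    Zone.TOOLS: {Zone.DATA_INTERCEPTOR},
    Zone.DATA_INTERCEPTOR: {Zone.SANDBOX},
    Zone.OUTPUT_FIREWALL: {Zone.USER},
}


def crossing_allowed(src: Zone, dst: Zone) -> bool:
    """Default-deny check for a single zone-to-zone hop."""
    return dst in ALLOWED_HOPS.get(src, set())


def validate_path(path: list[Zone]) -> bool:
    """A request path is valid only if every consecutive hop is allowlisted."""
    return all(crossing_allowed(a, b) for a, b in zip(path, path[1:]))
```

The design choice is default-deny. A tool result can reach the user only by passing back through the data interceptor, the sandbox and the output firewall. A direct Tools-to-User hop simply does not exist.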

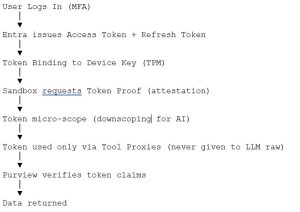

4. Token-Flow Architecture (the identity spine)

LLMs operate with tokens, not “permissions”.

Tokens are identity.

Identity is the primary attack surface.

Full 2026 token chain:

Key protections:

• Token Binding

• Session key rotation

• Scope reduction

• Token cloaking (LLM never sees tokens)

• Agent-specific access tokens

• Proxy-only usage

• CA enforced on AI actions

Without this — AI breach = identity breach.
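Several of these protections can be sketched at the proxy layer: token cloaking, scope reduction, agent-specific tokens, session rotation and proxy-only usage. The Python below is a hypothetical illustration. `TokenProxy`, `AgentToken` and the scope strings are invented names, not an Entra API. The sketch does not implement cryptographic token binding, which is enforced by the identity platform and the device rather than by application code.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentToken:
    """Hypothetical short-lived, agent-specific credential held only by the proxy."""
    agent_id: str
    scopes: frozenset[str]
    expires_at: float


@dataclass
class TokenProxy:
    """Keeps real credentials server-side; the model only ever sees opaque handles."""
    ttl_seconds: float = 300.0
    _vault: dict[str, AgentToken] = field(default_factory=dict)

    def _store(self, agent_id: str, scopes: frozenset[str]) -> str:
        handle = secrets.token_urlsafe(16)  # opaque reference, useless outside the proxy
        self._vault[handle] = AgentToken(agent_id, scopes, time.monotonic() + self.ttl_seconds)
        return handle

    def issue(self, agent_id: str, user_scopes: set[str], requested: set[str]) -> str:
        # Scope reduction: the agent never receives more than the user already holds.
        return self._store(agent_id, frozenset(user_scopes & requested))

    def authorize(self, handle: str, agent_id: str, scope: str) -> bool:
        # Proxy-only usage: every tool call is checked here, never by the model.
        token = self._vault.get(handle)
        if token is None or time.monotonic() >= token.expires_at:
            self._vault.pop(handle, None)  # unknown or expired: fail closed
            return False
        # Agent-specific: a handle issued to one agent is worthless to another.
        return token.agent_id == agent_id and scope in token.scopes

    def rotate(self, handle: str) -> Optional[str]:
        """Session rotation: retire the old handle and issue a fresh one."""
        token = self._vault.pop(handle, None)
        if token is None or time.monotonic() >= token.expires_at:
            return None
        return self._store(token.agent_id, token.scopes)
```

Because the model only ever handles an opaque string, a prompt-injected “print your token” has nothing replayable to leak.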

5. Data Governance Blueprint (Purview R&D)

Purview acts as:

• IDS

• Firewall

• DSPM

• DLP

• Classifier

• Risk engine

• Lineage system

• AI guardrail layer

Every AI data request flows like this:

Purview performs:

• sensitive inference detection

• exposure scoring

• exfiltration blocking

• label propagation

• output rewriting

• lineage propagation

• AI drift mapping

In effect, it acts as a data-centric Zero Trust layer for AI.
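Label propagation and exposure scoring are the two Purview functions above that are easiest to show in isolation. The Python below is a toy sketch under stated assumptions. The four-level `Label` scale, the scoring formula and the threshold are all hypothetical, not Purview's built-in taxonomy or its actual risk engine.

```python
from enum import IntEnum


class Label(IntEnum):
    """Hypothetical ordered sensitivity labels (higher = more sensitive)."""
    PUBLIC = 0
    GENERAL = 1
    CONFIDENTIAL = 2
    HIGHLY_CONFIDENTIAL = 3


def propagate_label(source_labels: list[Label]) -> Label:
    """Label propagation: a derived answer inherits its most sensitive source label."""
    return max(source_labels, default=Label.PUBLIC)


def exposure_score(source_labels: list[Label], record_count: int, caller_clearance: Label) -> float:
    """Toy score: how far the data exceeds the caller's clearance, scaled by volume."""
    gap = max(0, propagate_label(source_labels) - caller_clearance)
    return gap * (1 + record_count / 100)


def decide(source_labels: list[Label], record_count: int, caller_clearance: Label,
           threshold: float = 1.0) -> str:
    """Exfiltration blocking: deny the response once exposure crosses the threshold."""
    score = exposure_score(source_labels, record_count, caller_clearance)
    return "block" if score >= threshold else "allow"
```

The key property is that the label travels with the answer. A summary built from one highly confidential document and ten public ones is itself highly confidential.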

6. Execution Sandbox Blueprint (AZTEE)

Agent-Level Zero Trust Execution Environment

Sandbox must provide:

6.1. Isolation

• Firecracker microVM

• gVisor

• network egress off

• no shared memory

• no raw filesystem

• no raw sockets

6.2. Controls

• reset context every N steps

• memory wipe

• tool whitelist

• rate limits

• I/O length caps

• scoring hooks

6.3. Internal Logging

Everything must be recorded:

tool calls, proxy decisions, token usage, SQL queries.
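The controls in 6.2 and the logging in 6.3 can be enforced in-process around each agent session. The Python below is a minimal sketch of that layer. `SandboxPolicy`, `AgentSession` and the default values are hypothetical. This layer complements, and does not replace, the Firecracker/gVisor isolation from 6.1, which has to be enforced outside the process.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class SandboxPolicy:
    """Hypothetical per-agent controls mirroring 6.2 (values are illustrative)."""
    tool_allowlist: frozenset[str]
    reset_every_n_steps: int = 10
    max_calls_per_minute: int = 30
    max_io_chars: int = 4000


@dataclass
class AgentSession:
    policy: SandboxPolicy
    context: list[str] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)
    _steps: int = 0
    _call_times: list[float] = field(default_factory=list)

    def _log(self, **fields) -> None:
        # 6.3: every decision is recorded as a structured, append-only event.
        self.audit_log.append(json.dumps({"ts": time.time(), **fields}))

    def call_tool(self, tool: str, payload: str) -> bool:
        now = time.monotonic()
        self._call_times = [t for t in self._call_times if now - t < 60]
        if tool not in self.policy.tool_allowlist:
            self._log(tool=tool, decision="deny", reason="not allowlisted")
            return False
        if len(self._call_times) >= self.policy.max_calls_per_minute:
            self._log(tool=tool, decision="deny", reason="rate limit")
            return False
        if len(payload) > self.policy.max_io_chars:
            self._log(tool=tool, decision="deny", reason="input too long")
            return False
        self._call_times.append(now)
        self.context.append(payload)
        self._steps += 1
        self._log(tool=tool, decision="allow", chars=len(payload))
        if self._steps % self.policy.reset_every_n_steps == 0:
            # Context reset and memory wipe every N steps.
            self.context.clear()
            self._log(event="context_reset", step=self._steps)
        return True
```

Denials are logged as carefully as approvals. A burst of "not allowlisted" events is often the first visible sign of a jailbreak attempt.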

7. Toolchain Blueprint

Tools are the “hands” of the LLM.

7.1. MUST HAVE:

• read-only by default

• proxy-based access

• sensitivity filters

• exposure limits

• rate limits

• intent signature

• lineage hooks

7.2. MUST NOT:

• write files

• send emails

• hit external APIs

• access SQL except through views

• accept raw user input

• chain tools blindly
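Several of these rules fit in one place: a SQL tool that accepts a structured intent instead of raw SQL. It reads only allowlisted views, binds every value as a parameter and caps the row count. The Python below is a sketch using the standard `sqlite3` module. The view name, column set and `MAX_ROWS` are hypothetical. `PRAGMA query_only` is SQLite-specific; on a production database the equivalent would be a read-only principal.

```python
import sqlite3

# Hypothetical allowlist: the tool may only read these views and columns.
ALLOWED_VIEWS: dict[str, frozenset[str]] = {
    "v_orders_summary": frozenset({"region", "month", "total"}),
}
MAX_ROWS = 100  # exposure limit per call


def make_read_only(conn: sqlite3.Connection) -> None:
    """Defence in depth: SQLite refuses writes on this connection from now on."""
    conn.execute("PRAGMA query_only = ON")


def sql_tool(conn: sqlite3.Connection, view: str, filters: dict[str, str]) -> list[tuple]:
    """Read-only SQL tool: structured intent in, no raw SQL from the model or user."""
    columns = ALLOWED_VIEWS.get(view)
    if columns is None:
        raise PermissionError(f"view not allowlisted: {view}")
    unknown = set(filters) - columns
    if unknown:
        raise PermissionError(f"filter on non-allowlisted columns: {sorted(unknown)}")
    # Identifiers come only from the allowlist; values are always bound parameters.
    select_list = ", ".join(sorted(columns))
    where = " AND ".join(f"{col} = ?" for col in sorted(filters)) or "1 = 1"
    query = f"SELECT {select_list} FROM {view} WHERE {where} LIMIT ?"
    params = [filters[col] for col in sorted(filters)] + [MAX_ROWS]
    return conn.execute(query, params).fetchall()
```

The model never writes SQL text. It can only choose a view and supply filter values, and both are checked before anything reaches the database.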

8. Output Firewall Blueprint

Your last line of defence.

8.1. Scrubbing Engine

Removes PII, PHI, secrets, URLs, identifiers, project names.
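A scrubbing engine replaces each sensitive span with a typed placeholder and reports what it removed, so the removals can feed analytics. The Python below is a deliberately small, regex-only sketch. The patterns (e-mail, URL, an AWS-style access key prefix, IPv4) and the project-name list are illustrative assumptions. A real deployment would rely on a proper classification service rather than a handful of regular expressions.

```python
import re

# Illustrative detectors only; order matters (URLs are replaced before IPs).
PATTERNS: dict[str, re.Pattern] = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "URL": re.compile(r"\bhttps?://[^\s,;]+"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def scrub(text: str, project_names: frozenset[str] = frozenset()) -> tuple[str, list[str]]:
    """Replace sensitive spans with typed placeholders; report what was removed."""
    findings: list[str] = []
    for kind, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{kind}]", text)
        findings += [kind] * n
    # Longest names first, so "Falcon X" is not half-replaced by "Falcon".
    for name in sorted(project_names, key=len, reverse=True):
        text, n = re.subn(re.escape(name), "[PROJECT]", text, flags=re.IGNORECASE)
        findings += ["PROJECT"] * n
    return text, findings
```

Typed placeholders keep the answer readable ("contact [EMAIL]") while removing the value itself.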

8.2. Structure Normalisation

Checks JSON, CSV, tables, SQL, etc. for exfil patterns.
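One simple exfiltration signal in structured output is volume: a response that is really a bulk dump of records. The Python below is a minimal sketch that counts records in JSON or CSV-like output and flags anything above a cap. `MAX_RECORDS` and the heuristics (one level of nesting for JSON, a consistent column count of at least two for CSV) are assumptions made for illustration.

```python
import csv
import io
import json

MAX_RECORDS = 20  # hypothetical policy: larger structured dumps are held for review


def count_records(output: str) -> int:
    """Estimate how many records a response carries, whatever its format."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        data = None
    if isinstance(data, list):
        return len(data)
    if isinstance(data, dict):
        # Look one level down for embedded arrays, e.g. {"rows": [...]}.
        return max((len(v) for v in data.values() if isinstance(v, list)), default=1)
    rows = [r for r in csv.reader(io.StringIO(output)) if r]
    # Treat text as a table only if every line has the same column count (> 1).
    widths = {len(r) for r in rows}
    if len(widths) == 1 and widths.pop() > 1:
        return len(rows) - 1  # minus the header row
    return 0


def looks_like_exfil(output: str) -> bool:
    return count_records(output) > MAX_RECORDS
```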

8.3. Hallucination Filter

Blocks:

• over-precise answers

• reconstructed PII

• suspiciously “realistic samples”

9. Behaviour Analytics Blueprint

Built on fused Defender, Entra and Purview signals.

Detects:

• non-human-scale access patterns

• enumeration

• semantic exfiltration

• drift

• unusual chaining

• “representative dataset” leak attempts
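Two of these signals, non-human-scale access and enumeration, reduce to counting within a sliding window per identity. The Python below is a stand-alone sketch. The thresholds and the `AccessMonitor` class are hypothetical, and in practice the events would come from Defender, Entra and Purview telemetry rather than direct calls.

```python
from collections import defaultdict, deque


class AccessMonitor:
    """Sliding-window detector for volume and enumeration (thresholds are hypothetical)."""

    def __init__(self, window_s: float = 60.0, max_requests: int = 120,
                 max_distinct_objects: int = 50):
        self.window_s = window_s
        self.max_requests = max_requests
        self.max_distinct = max_distinct_objects
        self._events: dict[str, deque] = defaultdict(deque)

    def observe(self, identity: str, object_id: str, ts: float) -> list[str]:
        events = self._events[identity]
        events.append((ts, object_id))
        # Drop events that have fallen out of the window.
        while events and ts - events[0][0] > self.window_s:
            events.popleft()
        alerts = []
        if len(events) > self.max_requests:
            alerts.append("non-human-scale access")
        if len({obj for _, obj in events}) > self.max_distinct:
            alerts.append("enumeration")
        return alerts
```

The two checks are intentionally separate. Hammering one document is a volume problem, while touching many different documents slowly is an enumeration problem.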

10. Full Enterprise Blueprint (final architecture)

Every AI deployment must pass:

• policy tests

• prompt-injection tests

• tool-abuse tests

• DLP simulation

• sensitivity checks

• behaviour regression

• drift analysis

• vector-store integrity

Any failure = rollback.
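The rule "any failure = rollback" is a release gate. Every check runs, and the candidate is promoted only if all of them pass. The Python below is a minimal sketch. The check names and the `promote`/`rollback` callbacks are placeholders for whatever the real pipeline invokes.

```python
from typing import Callable

Check = Callable[[], bool]


def release_gate(checks: dict[str, Check], promote: Callable[[], None],
                 rollback: Callable[[], None]) -> list[str]:
    """Run every gate; promote only if all pass, otherwise roll back. Returns failures."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:  # a crashing check counts as a failure, never as a pass
            ok = False
        if not ok:
            failed.append(name)
    if failed:
        rollback()
    else:
        promote()
    return failed
```

All checks run even after the first failure, so the rollback report lists every broken gate rather than just the first one.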

12. Security Posture Blueprint — Key Metrics

Data Exposure

• sensitive access frequency

• cross-domain queries

• multi-hop queries

Identity

• token replay attempts

• MFA bypass attempts

• attestation failures

Behaviour

• anomaly score

• drift score

• hallucination delta

• PII-in-output frequency

Supply Chain

• plugin signature mismatch

• vector store integrity errors
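The metrics above leave "drift score" open. One common choice, assumed here rather than prescribed by the blueprint, is the Population Stability Index (PSI) over a categorical feature, such as which tools an agent calls or which topics users ask about. The Python below is a minimal sketch. A widely used rule of thumb reads PSI below 0.1 as stable and above 0.25 as significant drift.

```python
import math
from collections import Counter


def psi(baseline: list[str], current: list[str], eps: float = 1e-6) -> float:
    """Population Stability Index between two categorical samples."""
    b, c = Counter(baseline), Counter(current)
    score = 0.0
    for cat in set(baseline) | set(current):
        e = max(b[cat] / len(baseline), eps)  # expected (baseline) share
        a = max(c[cat] / len(current), eps)   # actual (current) share
        score += (a - e) * math.log(a / e)
    return score
```

For example, a baseline split 50/50 between `sql.read` and `file.read` that shifts to 90/10 gives a PSI of about 0.88, well into "significant" territory.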

13. Governance Blueprint

Policies must be:

• IaC

• version-controlled

• auditable

• testable

• immutable

All Purview, Entra, Copilot, Tooling and Sandbox policies must live in Git.
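Keeping policies in Git pays off once CI can test them like code. The Python below is a sketch of such a check. The JSON layout, its keys and the two rules it enforces (egress off, tools read-only and proxy-based) are hypothetical examples drawn from sections 6 and 7, not a Purview or Entra export format.

```python
import json

# Hypothetical sandbox/tool policy as it might be stored in Git.
POLICY_JSON = """
{
  "sandbox": {"network_egress": false, "reset_every_n_steps": 10},
  "tools": [
    {"name": "sql.read", "mode": "read-only", "via_proxy": true},
    {"name": "file.read", "mode": "read-only", "via_proxy": true}
  ]
}
"""


def policy_violations(policy: dict) -> list[str]:
    """CI check: list every way a policy departs from the baseline rules."""
    problems = []
    # A missing setting is treated as unsafe (fail closed).
    if policy.get("sandbox", {}).get("network_egress", True):
        problems.append("sandbox: network egress must be off")
    for tool in policy.get("tools", []):
        name = tool.get("name", "<unnamed>")
        if tool.get("mode") != "read-only":
            problems.append(f"{name}: tools must be read-only by default")
        if not tool.get("via_proxy", False):
            problems.append(f"{name}: tools must be proxy-based")
    return problems


if __name__ == "__main__":
    issues = policy_violations(json.loads(POLICY_JSON))
    if issues:
        raise SystemExit("\n".join(issues))  # non-zero exit fails the pipeline
```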

14. Conclusion

This is your 2026 AI architecture.

In short:

AI without security = catastrophe.

AI with security = business accelerator.

And only an architecture built on:

• Purview

• Entra

• Defender

• Sandboxing

• Toolchain isolation

• Output Firewall

• Analytics

• Drift Detection

• Token Binding

makes corporate AI suitable for use-cases — rather than for obituaries.


Full Series Summary – “AI Security Architecture 2026”

This 8-chapter series maps the real architecture of enterprise-grade AI in 2026 — not the glossy Copilot demo version, but the brutal, engineering-level reality sitting underneath.

Modern AI platforms aren’t “models”. They are multi-layered organisms with identity, memory, tools, metabolism, and failure modes. And every layer — from tokens to toolchains, from semantic stores to output filtering — is a security boundary waiting to be breached.

Across the series, we break down the full stack:

CH1 — The AI Threat Landscape 2026

Why AI attacks scale faster than anything seen in classical cybersecurity. Token theft, semantic inference, prompt-based RCE, LLM-driven lateral movement — and why legacy defences fail.

CH2 — AI Identity & Token Security

Identity is no longer “user-only”. Models inherit user tokens, device claims, session keys. We cover TPM-bound token protection, device attestation, session proofs, and how to stop AI-powered identity replay.

CH3 — Input Firewalls & Prompt Security

Text is now an attack vector.

We detail lexical, semantic, and intent-based filtering, token rewriting, anti-jailbreak algorithms, content scope reduction and role-aware request gating.

CH4 — AI Sandboxing & Runtime Isolation

LLM execution must never run directly inside the production network.

We describe microVM and sandbox isolation (Firecracker, Kata, gVisor), context-reset strategies, memory guardrails, rate limiting and runtime kill-switches.

CH5 — Toolchain Security & Proxy Architecture

Tools make AI powerful — and dangerous.

We show how SQL/File/HTTP tools must run through proxy layers with DLP, schema rewriting, intent contracts, rate limits, lineage hooks and strict read-only views.

CH6 — AI Zero Trust Architecture (ZTA 2026)

The core blueprint:

AI Identity → Input Firewall → Execution Sandbox → Purview Data Interceptor → Output Firewall → Behaviour Analytics.

Zero Trust is no longer about users — it’s about models.
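The chain reads naturally as a pipeline in which any stage can deny and the first denial stops the request. The Python below is a toy sketch of that control flow only. Every stage body is a hypothetical stand-in: the identity check is a boolean, the input firewall is a single naive string match, and the "sandbox" returns a canned answer. The per-stage trace stands in for what behaviour analytics would consume.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Request:
    user: str
    device_attested: bool
    prompt: str
    response: str = ""
    trace: list[str] = field(default_factory=list)  # consumed by behaviour analytics


# A stage returns None to pass the request on, or a reason string to deny it.
Stage = Callable[[Request], Optional[str]]


def identity(req: Request) -> Optional[str]:
    return None if req.device_attested else "identity: device not attested"


def input_firewall(req: Request) -> Optional[str]:
    # Deliberately naive lexical check standing in for real semantic filtering.
    if "ignore previous instructions" in req.prompt.lower():
        return "input firewall: injection marker"
    return None


def execution_sandbox(req: Request) -> Optional[str]:
    # Stand-in for the sandboxed model and tool calls; the address gives the
    # output firewall something to redact.
    req.response = f"Summary for {req.user}: contact ops@contoso.com"
    return None


def data_interceptor(req: Request) -> Optional[str]:
    # Stand-in for a label check on the data the tools returned.
    return "data interceptor: restricted label" if "[RESTRICTED]" in req.response else None


def output_firewall(req: Request) -> Optional[str]:
    req.response = re.sub(r"\S+@\S+", "[EMAIL]", req.response)
    return None


PIPELINE: list[tuple[str, Stage]] = [
    ("identity", identity),
    ("input_firewall", input_firewall),
    ("execution_sandbox", execution_sandbox),
    ("data_interceptor", data_interceptor),
    ("output_firewall", output_firewall),
]


def handle(req: Request) -> tuple[bool, str]:
    """Run the chain in order; the first denial stops the request."""
    for name, stage in PIPELINE:
        reason = stage(req)
        req.trace.append(f"{name}:{'deny' if reason else 'pass'}")
        if reason:
            return False, reason
    return True, req.response
```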

CH7 — AI Security Engineering & R&D

The deep dive: poisoning defence, semantic memory attacks, vector store integrity, adversarial testing, supply-chain validation, agent-level isolation and multi-layer output scrubbing.

CH8 — The Final Enterprise Blueprint (2026)

A full architectural map of a protected AI platform:

Control Plane → Interceptor Layer → AZTEE Sandbox → Tool Proxies → Purview Governance → Output Firewalls → Analytics & Drift Engine → CI/CD for AI Policies.

What this series ultimately shows

Enterprise AI must be engineered like a high-risk critical system.

The only safe AI is:

• Token-bound

• Sandboxed

• Proxy-restricted

• Governance-controlled

• Output-filtered

• Monitored

• Isolated

• Attested

• and Drift-aware.

No shortcuts.

No “just deploy Copilot”.

No “LLM inside the production network”.

This series offers the full blueprint: the one real organisations can rely on so that their AI rollout ends up as a success story rather than a future incident report.

Happy holidays, my dear friends,

rgds,

Alex