Hey, exactly as I promised on LinkedIn.

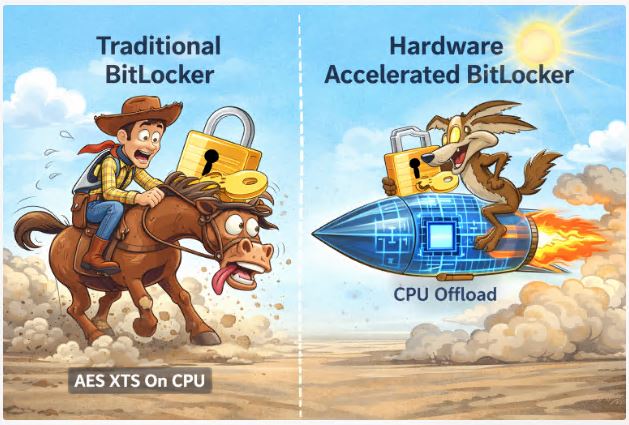

In December 2025 Microsoft introduced hardware accelerated BitLocker. Once you remove the press release language, what this really represents is a relocation of the critical cryptographic workload from the general purpose CPU cores into a dedicated hardware domain within the processor or SoC. The algorithm remains the same. The execution architecture changes.

BitLocker continues to use AES-XTS for full volume encryption, with AES-XTS-256 on the hardware accelerated path (software BitLocker defaults to XTS-AES 128 unless policy selects 256-bit keys). XTS encrypts each 512-byte or 4K sector using two keys and a tweak value derived from the logical sector number. Until now, each AES operation was executed on the main CPU cores, typically via AES-NI on x86 or equivalent cryptographic extensions on ARM.
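To make the per sector cost concrete, here is a minimal Python sketch of the tweak arithmetic in the standard XTS construction (IEEE 1619). It is not BitLocker's code: the function names are made up for illustration, the AES step itself is left out, and the little-endian sector encoding follows the IEEE 1619 convention, which I am assuming BitLocker follows as well.

```python
AES_BLOCK = 16  # AES block size in bytes


def initial_tweak_input(sector_number: int) -> bytes:
    """Encode the logical sector number as a 128-bit little-endian value.

    In XTS this value is encrypted with the second key to produce the
    first tweak; that AES call is deliberately omitted in this sketch.
    """
    return sector_number.to_bytes(16, "little")


def mul_alpha(tweak: bytes) -> bytes:
    """Multiply a 128-bit tweak by alpha in GF(2^128).

    XTS uses this step to derive the tweak for the next 16-byte block.
    The reduction polynomial is x^128 + x^7 + x^2 + x + 1 (0x87).
    """
    value = int.from_bytes(tweak, "little") << 1
    if value >> 128:  # a bit was shifted out of the top, so reduce
        value = (value & ((1 << 128) - 1)) ^ 0x87
    return value.to_bytes(16, "little")


def aes_calls_per_sector(sector_size: int) -> int:
    """One AES call for the tweak plus one per 16-byte data block."""
    return 1 + sector_size // AES_BLOCK
```

For a 4K sector that is 256 data blocks plus one tweak encryption, so 257 AES block operations for every sector read or written.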

AES-NI is hardware assisted at the instruction level, but it still runs on the general compute cores. Under heavy I/O workloads, every read and write generates additional cryptographic pressure on the CPU scheduler, cache hierarchy and memory bus.

The previous data path

The processing flow traditionally looked like this:

A read or write request is initiated by the file system

The BitLocker filter driver intercepts the IRP

Data is passed into the cryptographic layer

The CPU executes AES-XTS via AES-NI

The result is returned into the storage stack

Even with AES-NI, this requires context switching, key loading into registers, driver level coordination and reintegration into the storage pipeline.

On high throughput NVMe devices delivering several gigabytes per second, the volume of AES operations scales directly with data movement. Cryptographic operations begin competing with user space workloads for CPU time.
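A back of the envelope calculation shows the scale. The sketch below counts AES block operations and rounds for a given throughput, using two fixed facts: AES works on 16-byte blocks, and AES-256 uses 14 rounds per block. The throughput figure is a hypothetical example rather than a measurement, the function name is my own, and the one extra AES call per sector accounts for the XTS tweak encryption.

```python
AES_BLOCK_BYTES = 16  # AES block size
AES256_ROUNDS = 14    # rounds per block with a 256-bit key


def aes_load(bytes_per_second: int, sector_size: int = 4096) -> dict:
    """Estimate AES work per second for XTS at a given throughput."""
    data_blocks = bytes_per_second // AES_BLOCK_BYTES
    tweak_blocks = bytes_per_second // sector_size  # one tweak per sector
    blocks = data_blocks + tweak_blocks
    return {
        "blocks_per_s": blocks,
        "rounds_per_s": blocks * AES256_ROUNDS,
    }


if __name__ == "__main__":
    # Hypothetical drive sustaining roughly 4 GB/s of encrypted traffic.
    print(aes_load(4_096_000_000))
```

At roughly 4 GB/s this comes to about 257 million AES block operations and about 3.6 billion rounds per second, all of which would otherwise land on the general compute cores.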

The new path with hardware offload

In hardware accelerated mode, the routing changes.

BitLocker remains a filter driver in the storage stack, but instead of performing AES-XTS on shared compute cores, it delegates bulk encryption operations to a dedicated cryptographic engine embedded in the SoC.

This engine provides:

Hardware level AES-XTS-256 execution

Hardware based tweak generation

Isolated handling of temporary encryption keys

The critical difference is that the bulk encryption key is no longer handled to the same extent within the shared CPU execution context. It is wrapped and operated upon inside a hardware domain that is isolated from conventional system memory.

The CPU remains responsible for orchestration, but it no longer executes the millions of AES rounds directly. This reduces ALU utilisation, cache pressure and memory bus contention.

Integration with TPM and Secure Boot

The trust model does not change. TPM continues to protect the volume master key and enforce protection during boot. Secure Boot maintains integrity of the boot chain.

The distinction lies in post unlock behaviour. Once the volume is unlocked, bulk encryption operations can be executed within the hardware cryptographic block rather than on the main cores.

This reduces exposure to certain attack classes such as memory scraping or DMA based extraction attempts. The less frequently encryption keys circulate in general RAM, the smaller the attack surface becomes.

Scheduler and power impact

Offloading AES workloads to a dedicated engine reduces the number of cryptographic instructions executed on the main cores. This results in:

Fewer context switches

Reduced contention between storage I/O and user workloads

Lower cache stress

On mobile systems this also reduces the frequency at which performance cores need to enter high power states. The SoC can process encryption using lower energy pathways, improving overall battery efficiency.

On ARM systems with heterogeneous core design, this is particularly relevant. Encryption no longer forces sustained usage of high performance cores, which improves thermal behaviour and sustained efficiency.

Compatibility and policy considerations

Hardware offload does not activate in all configurations. Several conditions must be satisfied:

A supported SoC or CPU with an integrated cryptographic engine

Compatible storage stack drivers

Use of supported encryption methods such as AES-XTS-256

No conflicting Group Policy or MDM settings
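The conditions above amount to an all or nothing check. The following Python sketch models that decision. The `PlatformState` fields, the `SUPPORTED_METHODS` set and `select_path` are hypothetical names for illustration and do not correspond to any Windows API.

```python
from dataclasses import dataclass

# Assumption: only the method named in the text is treated as supported here.
SUPPORTED_METHODS = {"AES-XTS-256"}


@dataclass
class PlatformState:
    has_crypto_engine: bool   # supported SoC or CPU with a crypto engine
    drivers_compatible: bool  # storage stack drivers support offload
    encryption_method: str    # method configured for the volume
    policy_conflict: bool     # Group Policy or MDM setting blocks offload


def select_path(state: PlatformState) -> str:
    """Return "hardware" only if every condition holds, else "software"."""
    eligible = (
        state.has_crypto_engine
        and state.drivers_compatible
        and state.encryption_method in SUPPORTED_METHODS
        and not state.policy_conflict
    )
    return "hardware" if eligible else "software"
```

A single failed condition, such as a policy that enforces an unsupported method, is enough to drop the volume back to the software path.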

The current mode can be verified using:

manage-bde -status

If hardware acceleration is active, the Encryption Method field in the output will indicate this.
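For fleets where checking by hand does not scale, the Encryption Method line can be pulled out of the command output with a few lines of Python. This is a sketch under assumptions: it expects the English field label `Encryption Method:`, the `SAMPLE` text is an abbreviated illustration rather than captured output, and the helper name `encryption_methods` is my own.

```python
# Abbreviated, illustrative status text (not captured from a real machine).
SAMPLE = """\
Volume C: [Windows]
[OS Volume]

    Conversion Status:    Fully Encrypted
    Encryption Method:    XTS-AES 128
    Protection Status:    Protection On
"""


def encryption_methods(status_output: str) -> dict:
    """Map each volume header line to its reported encryption method."""
    methods = {}
    volume = None
    for line in status_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Volume "):
            volume = stripped  # remember which volume the fields belong to
        elif stripped.startswith("Encryption Method:"):
            methods[volume] = stripped.split(":", 1)[1].strip()
    return methods


if __name__ == "__main__":
    for volume, method in encryption_methods(SAMPLE).items():
        print(volume, "->", method)
```

On a real machine the text would come from running `manage-bde -status` in an elevated prompt, for example via `subprocess.run(["manage-bde", "-status"], capture_output=True, text=True).stdout`.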

If policies enforce legacy encryption configurations or unsupported parameters, the system will revert to software execution.

Comparison with self encrypting drives

It is important not to confuse hardware accelerated BitLocker with self encrypting drives such as Opal SSDs. In the Opal model, encryption is performed entirely within the storage device firmware, and the operating system manages only authentication and key provisioning.

With hardware accelerated BitLocker, encryption remains under Windows control. This means:

Continued integration with TPM

Full compatibility with existing BitLocker management policies

No dependency on SSD firmware security implementation

This distinction matters because storage level encryption has previously been criticised for inconsistent firmware security and implementation flaws.

Practical impact

On supported hardware platforms:

Encrypted NVMe performance approaches unencrypted throughput

CPU resources are freed for user and system workloads

Energy consumption under heavy I/O decreases

For enterprise environments this removes one of the historical objections to full disk encryption, namely measurable performance degradation under sustained storage load.

Final perspective

Hardware accelerated BitLocker does not introduce a new cryptographic algorithm. It does not alter the trust chain. It does not redefine Windows disk encryption policy.

It relocates execution.

The same AES-XTS-256 algorithm continues to operate. The same TPM backed key protection model remains intact.

The difference lies in where millions of AES rounds per second are computed.

In a landscape where storage throughput continues to increase, moving cryptographic workload closer to silicon is not a marketing flourish. It is an architectural necessity.

rgds,

Alex